Hello Toradex team,

We are testing the iMX8QM PCIe bandwidth to see if it is sufficient for our testing suite.

The problem we are trying to solve is connecting a network card faster than 1 GbE.

This is a continuation of imx8-pcie-link-only-working-on-gen1.

The difference from the previous post is that we now have 2 lanes (x2) on PCIEA.

//apalis-imx8_pcie-gen3-x2.dts

/dts-v1/;

/plugin/;

#include <dt-bindings/soc/imx8_hsio.h>

&pciea {

status = "okay";

hsio-cfg = <PCIEAX2PCIEBX1>;

num-lanes = <2>;

fsl,max-link-speed = <3>;

};

&sata {

status = "disabled";

};

The following tests were run on two separate NICs:

(Intel) 10Gtek X520-10G-1S

(Aquantia) OWC OWCPCIE10GB

The Intel card was connected over fibre and the Aquantia card over CAT6 with RJ45.

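Results are the average speeds reported by iperf3 after a 10-second test with 5 parallel connections. The percentages in parentheses are the CPU usage of the iperf3 process and of CPU0. The loopback tests were done by running both the server and the client on the iMX8.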

| Test Case | Intel | Aquantia |
|---|---|---|
| Up | 4.25 Gb/s (iperf3 100%, CPU0 78%) | 4.38 Gb/s (iperf3 100%, CPU0 70%) |
| Down | 1.58 Gb/s (iperf3 30%, CPU0 100%) | 1.20 Gb/s (iperf3 36%, CPU0 100%) |
| Loopback Up | 6.52 Gb/s (iperf3 85%, CPU0 50%) | 6.46 Gb/s (iperf3 84%, CPU0 50%) |
| Loopback Down | 6.66 Gb/s (iperf3 85%, CPU0 50%) | 6.89 Gb/s (iperf3 84%, CPU0 50%) |
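For anyone who wants to reproduce the rows above, the measurement can be scripted roughly as follows. This is only a sketch: the server address is a placeholder, the helper names are our own, and treating "Down" as iperf3 reverse mode (`-R`) is an assumption about how the directions map. It relies on iperf3's JSON output (`-J`) and reads the summed sender and receiver rates from it.

```python
import json
import subprocess


def iperf3_command(server, seconds=10, streams=5, reverse=False):
    """Build the iperf3 client command line for one test run."""
    cmd = ["iperf3", "-c", server, "-t", str(seconds), "-P", str(streams), "-J"]
    if reverse:
        # Reverse mode: the server sends, the client receives.
        cmd.append("-R")
    return cmd


def summarize(report):
    """Extract the summed throughput in Gb/s from a parsed iperf3 JSON report."""
    end = report["end"]
    return {
        "sent_gbps": end["sum_sent"]["bits_per_second"] / 1e9,
        "received_gbps": end["sum_received"]["bits_per_second"] / 1e9,
    }


def run(server, **kwargs):
    """Run iperf3 against `server` (hypothetical address) and summarize the result."""
    result = subprocess.run(
        iperf3_command(server, **kwargs),
        check=True,
        capture_output=True,
        text=True,
    )
    return summarize(json.loads(result.stdout))
```

A call such as `run("192.168.1.2", reverse=True)` would then return the two rates for one direction; the address here is purely illustrative.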

root@apalis-imx8-07307404:~# uname -a

Linux apalis-imx8-07307404 5.4.193-5.7.1-devel+git.f78299297185 #1 SMP PREEMPT Mon Jul 11 14:42:03 UTC 2022 aarch64 aarch64 aarch64 GNU/Linux

root@apalis-imx8-07307404:~# fw_printenv defargs

defargs=pci=pcie_bus_perf,nommconf,nomsi

Intel dmesg.

root@apalis-imx8-07307404:~# dmesg | grep pci

[ 0.000000] Kernel command line: pci=pcie_bus_perf,nommconf,nomsi root=PARTUUID=98821cdc-02 ro rootwait

[ 0.274715] imx6q-pcie 5f000000.pcie: 5f000000.pcie supply epdev_on not found, using dummy regulator

[ 1.790020] ehci-pci: EHCI PCI platform driver

[ 2.577932] imx6q-pcie 5f000000.pcie: 5f000000.pcie supply epdev_on not found, using dummy regulator

[ 2.595697] imx6q-pcie 5f000000.pcie: No cache used with register defaults set!

[ 2.726252] imx6q-pcie 5f000000.pcie: PCIe PLL locked after 0 us.

[ 2.945171] imx6q-pcie 5f000000.pcie: host bridge /bus@5f000000/pcie@0x5f000000 ranges:

[ 2.953227] imx6q-pcie 5f000000.pcie: IO 0x6ff80000..0x6ff8ffff -> 0x00000000

[ 2.960743] imx6q-pcie 5f000000.pcie: MEM 0x60000000..0x6fefffff -> 0x60000000

[ 3.068430] imx6q-pcie 5f000000.pcie: Link up

[ 3.172881] imx6q-pcie 5f000000.pcie: Link up

[ 3.177291] imx6q-pcie 5f000000.pcie: Link up, Gen2

[ 3.182424] imx6q-pcie 5f000000.pcie: PCI host bridge to bus 0000:00

[ 3.188863] pci_bus 0000:00: root bus resource [bus 00-ff]

[ 3.194593] pci_bus 0000:00: root bus resource [io 0x0000-0xffff]

[ 3.200805] pci_bus 0000:00: root bus resource [mem 0x60000000-0x6fefffff]

[ 3.207728] pci 0000:00:00.0: [1957:0000] type 01 class 0x060400

[ 3.213775] pci 0000:00:00.0: reg 0x10: [mem 0x00000000-0x00ffffff]

[ 3.220074] pci 0000:00:00.0: reg 0x38: [mem 0x00000000-0x00ffffff pref]

[ 3.226841] pci 0000:00:00.0: supports D1 D2

[ 3.231128] pci 0000:00:00.0: PME# supported from D0 D1 D2 D3hot

[ 3.243698] pci 0000:01:00.0: [8086:1557] type 00 class 0x020000

[ 3.249811] pci 0000:01:00.0: reg 0x10: [mem 0x00000000-0x0007ffff 64bit pref]

[ 3.257080] pci 0000:01:00.0: reg 0x18: [io 0x0000-0x001f]

[ 3.262722] pci 0000:01:00.0: reg 0x20: [mem 0x00000000-0x00003fff 64bit pref]

[ 3.269982] pci 0000:01:00.0: reg 0x30: [mem 0x00000000-0x0007ffff pref]

[ 3.276912] pci 0000:01:00.0: PME# supported from D0 D3hot

[ 3.282500] pci 0000:01:00.0: reg 0x184: [mem 0x00000000-0x00003fff 64bit pref]

[ 3.289843] pci 0000:01:00.0: VF(n) BAR0 space: [mem 0x00000000-0x000fffff 64bit pref] (contains BAR0 for 64 VFs)

[ 3.300189] pci 0000:01:00.0: reg 0x190: [mem 0x00000000-0x00003fff 64bit pref]

[ 3.307523] pci 0000:01:00.0: VF(n) BAR3 space: [mem 0x00000000-0x000fffff 64bit pref] (contains BAR3 for 64 VFs)

[ 3.318483] pci 0000:01:00.0: 8.000 Gb/s available PCIe bandwidth, limited by 5 GT/s x2 link at 0000:00:00.0 (capable of 32.000 Gb/s with 5 GT/s x8 link)

[ 3.352699] pci 0000:00:00.0: BAR 0: assigned [mem 0x60000000-0x60ffffff]

[ 3.359727] pci 0000:00:00.0: BAR 6: assigned [mem 0x61000000-0x61ffffff pref]

[ 3.366987] pci 0000:00:00.0: BAR 14: assigned [mem 0x62000000-0x620fffff]

[ 3.373908] pci 0000:00:00.0: BAR 15: assigned [mem 0x62100000-0x623fffff 64bit pref]

[ 3.381959] pci 0000:00:00.0: BAR 13: assigned [io 0x1000-0x1fff]

[ 3.388180] pci 0000:01:00.0: BAR 0: assigned [mem 0x62100000-0x6217ffff 64bit pref]

[ 3.395966] pci 0000:01:00.0: BAR 6: assigned [mem 0x62000000-0x6207ffff pref]

[ 3.403218] pci 0000:01:00.0: BAR 4: assigned [mem 0x62180000-0x62183fff 64bit pref]

[ 3.411004] pci 0000:01:00.0: BAR 7: assigned [mem 0x62184000-0x62283fff 64bit pref]

[ 3.418787] pci 0000:01:00.0: BAR 10: assigned [mem 0x62284000-0x62383fff 64bit pref]

[ 3.426645] pci 0000:01:00.0: BAR 2: assigned [io 0x1000-0x101f]

[ 3.432779] pci 0000:00:00.0: PCI bridge to [bus 01-ff]

[ 3.438042] pci 0000:00:00.0: bridge window [io 0x1000-0x1fff]

[ 3.444170] pci 0000:00:00.0: bridge window [mem 0x62000000-0x620fffff]

[ 3.450983] pci 0000:00:00.0: bridge window [mem 0x62100000-0x623fffff 64bit pref]

[ 3.458768] pci 0000:00:00.0: Max Payload Size set to 256/ 256 (was 128), Max Read Rq 256

[ 3.467260] pci 0000:01:00.0: Max Payload Size set to 256/ 512 (was 128), Max Read Rq 256

[ 3.476168] pcieport 0000:00:00.0: PME: Signaling with IRQ 565

Aquantia dmesg.

root@apalis-imx8-07307404:~# dmesg | grep pci

[ 0.000000] Kernel command line: pci=pcie_bus_perf,nommconf,nomsi root=PARTUUID=98821cdc-02 ro rootwait

[ 0.271244] imx6q-pcie 5f000000.pcie: 5f000000.pcie supply epdev_on not found, using dummy regulator

[ 1.756256] ehci-pci: EHCI PCI platform driver

[ 2.545351] imx6q-pcie 5f000000.pcie: 5f000000.pcie supply epdev_on not found, using dummy regulator

[ 2.567933] imx6q-pcie 5f000000.pcie: No cache used with register defaults set!

[ 2.693275] imx6q-pcie 5f000000.pcie: PCIe PLL locked after 0 us.

[ 2.912687] imx6q-pcie 5f000000.pcie: host bridge /bus@5f000000/pcie@0x5f000000 ranges:

[ 2.920813] imx6q-pcie 5f000000.pcie: IO 0x6ff80000..0x6ff8ffff -> 0x00000000

[ 2.928261] imx6q-pcie 5f000000.pcie: MEM 0x60000000..0x6fefffff -> 0x60000000

[ 3.036126] imx6q-pcie 5f000000.pcie: Link up

[ 3.140580] imx6q-pcie 5f000000.pcie: Link up

[ 3.145005] imx6q-pcie 5f000000.pcie: Link up, Gen3

[ 3.150140] imx6q-pcie 5f000000.pcie: PCI host bridge to bus 0000:00

[ 3.156582] pci_bus 0000:00: root bus resource [bus 00-ff]

[ 3.162324] pci_bus 0000:00: root bus resource [io 0x0000-0xffff]

[ 3.168535] pci_bus 0000:00: root bus resource [mem 0x60000000-0x6fefffff]

[ 3.175455] pci 0000:00:00.0: [1957:0000] type 01 class 0x060400

[ 3.181504] pci 0000:00:00.0: reg 0x10: [mem 0x00000000-0x00ffffff]

[ 3.187796] pci 0000:00:00.0: reg 0x38: [mem 0x00000000-0x00ffffff pref]

[ 3.194563] pci 0000:00:00.0: supports D1 D2

[ 3.198854] pci 0000:00:00.0: PME# supported from D0 D1 D2 D3hot

[ 3.211445] pci 0000:01:00.0: [1d6a:94c0] type 00 class 0x020000

[ 3.217595] pci 0000:01:00.0: reg 0x10: [mem 0x00000000-0x0007ffff 64bit]

[ 3.224430] pci 0000:01:00.0: reg 0x18: [mem 0x00000000-0x00000fff 64bit]

[ 3.231280] pci 0000:01:00.0: reg 0x20: [mem 0x00000000-0x003fffff 64bit]

[ 3.238101] pci 0000:01:00.0: reg 0x30: [mem 0x00000000-0x0001ffff pref]

[ 3.245100] pci 0000:01:00.0: supports D1 D2

[ 3.249384] pci 0000:01:00.0: PME# supported from D0 D1 D3hot D3cold

[ 3.255944] pci 0000:01:00.0: 15.752 Gb/s available PCIe bandwidth, limited by 8 GT/s x2 link at 0000:00:00.0 (capable of 31.506 Gb/s with 16 GT/s x2 link)

[ 3.291683] pci 0000:00:00.0: BAR 0: assigned [mem 0x60000000-0x60ffffff]

[ 3.298499] pci 0000:00:00.0: BAR 6: assigned [mem 0x61000000-0x61ffffff pref]

[ 3.305762] pci 0000:00:00.0: BAR 14: assigned [mem 0x62000000-0x625fffff]

[ 3.312663] pci 0000:01:00.0: BAR 4: assigned [mem 0x62000000-0x623fffff 64bit]

[ 3.320028] pci 0000:01:00.0: BAR 0: assigned [mem 0x62400000-0x6247ffff 64bit]

[ 3.327384] pci 0000:01:00.0: BAR 6: assigned [mem 0x62480000-0x6249ffff pref]

[ 3.334635] pci 0000:01:00.0: BAR 2: assigned [mem 0x624a0000-0x624a0fff 64bit]

[ 3.341987] pci 0000:00:00.0: PCI bridge to [bus 01-ff]

[ 3.347240] pci 0000:00:00.0: bridge window [mem 0x62000000-0x625fffff]

[ 3.354060] pci 0000:00:00.0: Max Payload Size set to 256/ 256 (was 128), Max Read Rq 256

[ 3.362564] pci 0000:01:00.0: Max Payload Size set to 256/ 512 (was 128), Max Read Rq 256

[ 3.371453] pcieport 0000:00:00.0: PME: Signaling with IRQ 565
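As a sanity check on the "available PCIe bandwidth" lines in the two dmesg outputs above, the figures can be reproduced from the line rate, the encoding overhead and the lane count. This is a minimal sketch with a function name of our own; the integer rounding is chosen so the results match what the kernel printed, which is an assumption about how it derives them.

```python
def link_bandwidth_mbps(gt_per_s, lanes):
    """Usable PCIe bandwidth in Mb/s for a given line rate and lane count.

    Gen1/Gen2 (2.5 and 5 GT/s) use 8b/10b encoding; Gen3 and Gen4
    (8 and 16 GT/s) use 128b/130b encoding.
    """
    raw_mbps = int(gt_per_s * 1000)
    if gt_per_s >= 8:
        per_lane = raw_mbps * 128 // 130  # 128b/130b encoding
    else:
        per_lane = raw_mbps * 8 // 10     # 8b/10b encoding
    return per_lane * lanes


# Intel card: link trained at Gen2 x2.
print(link_bandwidth_mbps(5, 2) / 1000)   # 8.0
# Aquantia card: link trained at Gen3 x2.
print(link_bandwidth_mbps(8, 2) / 1000)   # 15.752
```

Both figures are well above the download speeds we measured, so the raw link rate alone does not seem to explain them.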

We were wondering why the difference between upload and download is so huge.

We found a few other articles about similar tests:

PCIe-Bandwidth Speed table

i-MX6Q-PCIe-EP-RC-Validation-System

When we asked NXP about this, they said the problem is the device connected to PCIe:

iMX8QM-PCIe-speed-troubleshooting

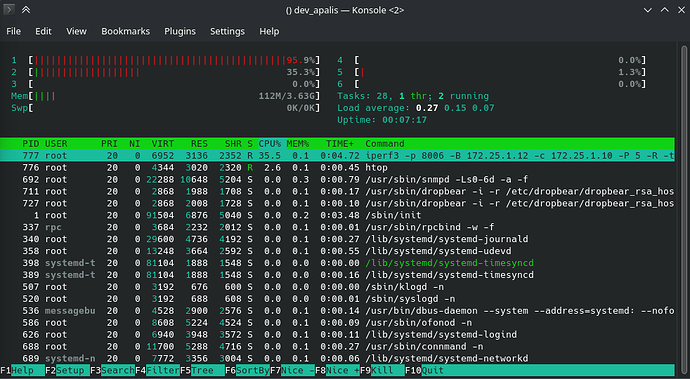

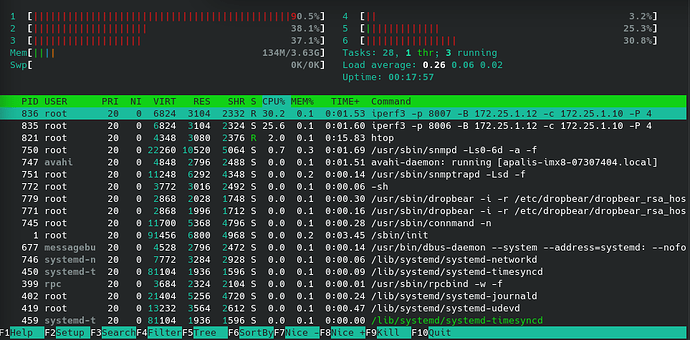

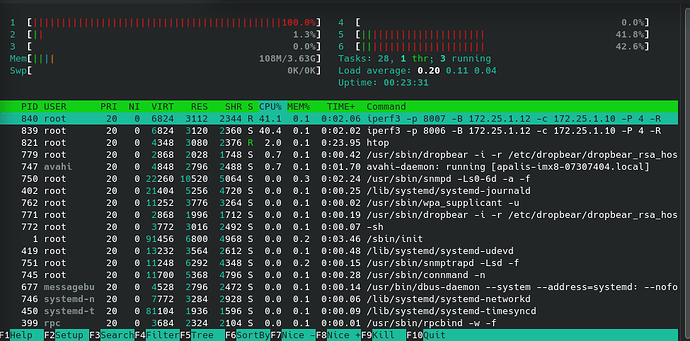

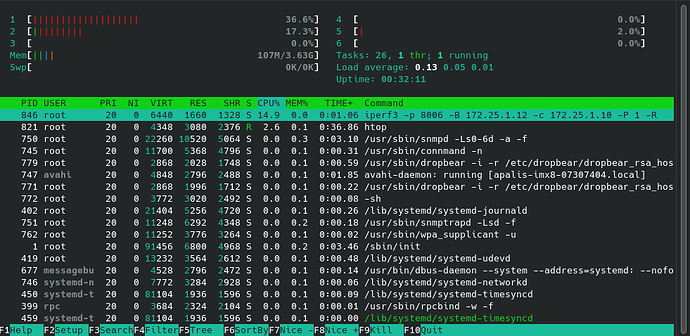

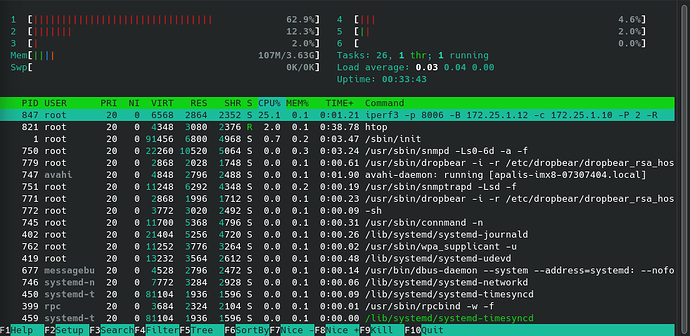

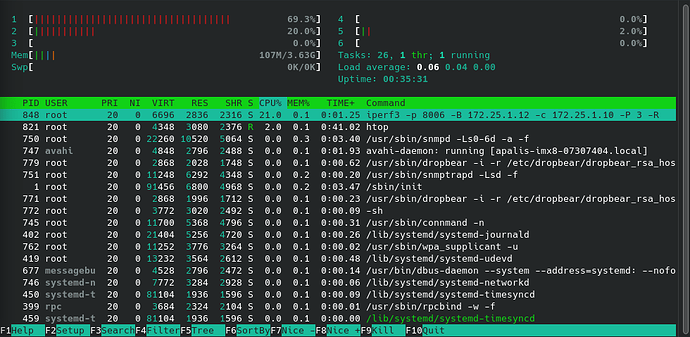

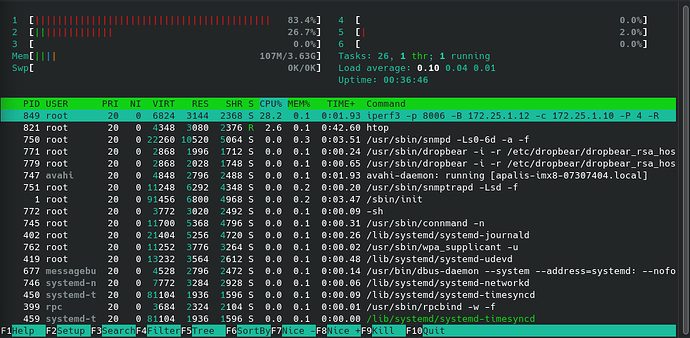

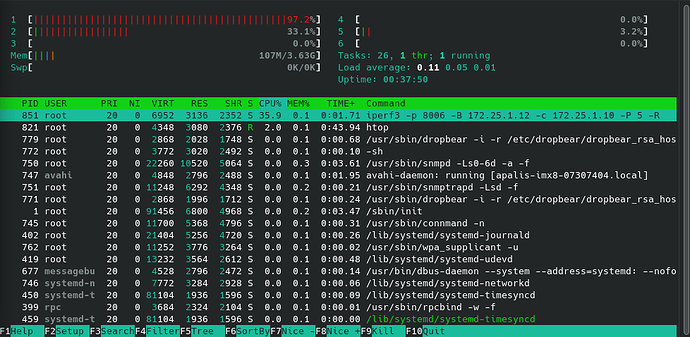

We also observed odd CPU behaviour during these tests. Every time iperf3 starts, CPU0 becomes heavily utilized or maxed out, while htop does not show any process that would account for that CPU usage.

It is always CPU0. We tested this by setting the affinity of iperf3 to CPU0, which drastically affected the resulting speeds. The CPU0 utilization still was not shown in the htop process list.

We are trying to increase the download speed to at least 2.5 Gb/s. We also tried changing the MTU size; it helps for upload but has no effect on download.

It appears that CPU0 is limiting the transfer speed, but we are unable to find out what is using it.
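One thing we could check is whether the unaccounted CPU0 time is spent in interrupt or softirq handling rather than in a process. That is only a guess on our side, not something we have confirmed. A minimal sketch, assuming the standard `/proc/stat` per-CPU layout (user, nice, system, idle, iowait, irq, softirq, steal); the helper names are our own:

```python
import time

# Order of the first eight per-CPU counters in /proc/stat.
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(stat_text, cpu="cpu0"):
    """Return the time counters for one CPU from the text of /proc/stat."""
    for line in stat_text.splitlines():
        parts = line.split()
        if parts and parts[0] == cpu:
            values = [int(v) for v in parts[1:1 + len(FIELDS)]]
            return dict(zip(FIELDS, values))
    raise ValueError(f"{cpu} not found in /proc/stat text")


def breakdown(before, after):
    """Percentage of elapsed CPU time spent in each state between two samples."""
    delta = {name: after[name] - before[name] for name in FIELDS}
    total = sum(delta.values())
    if total == 0:
        return {name: 0.0 for name in FIELDS}
    return {name: 100.0 * value / total for name, value in delta.items()}


def sample(cpu="cpu0", interval=1.0):
    """Read /proc/stat twice, `interval` seconds apart, and return the breakdown."""
    with open("/proc/stat") as f:
        before = parse_cpu_line(f.read(), cpu)
    time.sleep(interval)
    with open("/proc/stat") as f:
        after = parse_cpu_line(f.read(), cpu)
    return breakdown(before, after)
```

Running `sample("cpu0")` while iperf3 is active would show how much of CPU0's time falls under `irq` and `softirq` compared with `user` and `system`.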

Best regards,

Aleš