I’m trying to include an external library with my application. To be specific, I’m using Crank Storyboard and I’m trying to include the greio lib. The VS Extension creates a Docker container, and I’m assuming the compiling and linking happen within that container. How do I get the greio lib copied over to the container so the linker can use it? I would assume I have to configure it somehow in .\appconfig_0.

Greetings @jeffbelz,

Since you want to use Crank Storyboard, may I suggest an alternative. The team at Crank has worked with us before to integrate their Storyboard software with Torizon and containers. More info on this here: Partner Demo Container - Crank Storyboard | Toradex Developer Center

The integration was done via their IDE/designer tooling. It might be possible to do this in Visual Studio as well, but it might be easier to leverage what Crank has already done. The Crank team is also pretty open to supporting customers using Torizon and already has some experience with it, so they should be able to help get any Crank-based apps going.

Best Regards,

Jeremias

In general this is not really a Crank issue, even though I’m using Crank. Their IDE does not set up external libs in Visual Studio; it only SCPs files over. There is a second part to Crank, and that is their IO library, which allows the C/C++ program to access the GUI. It’s basically a precompiled lib with a header file, and it all comes in a folder. I need to know how to copy that folder over to the container at build time so it can build remotely. I was reading “How to Import a C/C++ Application to Torizon” and came across this line:

ENV LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/#%application.appname%#/lib"

but there is no explanation of what it does or how to use it. I’m assuming this copies the lib path, but is $LD_LIBRARY_PATH a PC path or a Linux path? In general, how do I work with external libs with the Visual Studio extension? I have a header file and a compiled lib file to work with, but I have no idea how to get them over to the container so it can build with them.

In the general case, the scenario is similar to what you saw in the “How to Import a C/C++ Application to Torizon” article.

The difference is that in that article the external library had to be built from scratch and then deployed. In your case, it seems it only has to be deployed. In the article, the deployment is done via a custom task that deploys the built library to the appconfig_0/work/<application name> folder. This is sufficient since everything in this folder gets copied into the resulting container.

As for the LD_LIBRARY_PATH, this is just a standard Linux environment variable. It tells applications where they should look to find library files.
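To make that concrete: the variable holds Linux paths as seen inside the container, never Windows paths. Below is a minimal sketch of what the Dockerfile line might look like once the application name has been filled in, assuming a hypothetical application called `myapp`. That the `#%application.appname%#` tag is a placeholder substituted by the extension is an assumption based on how the article uses it.

```dockerfile
# Hypothetical expansion for an application named "myapp".
# $LD_LIBRARY_PATH is whatever the variable already contains in the image;
# /myapp/lib is appended as an extra directory for the dynamic loader to search.
ENV LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/myapp/lib"
```

Entries are separated by colons, so a shared object placed in `/myapp/lib` inside the container could be found at run time without being installed into a system directory. Note that this variable is consulted by the dynamic loader when the program starts; the link step typically still needs its own search path (`-L`).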

By the way just to clarify, are you using Visual Studio, or Visual Studio Code? You’ve been mentioning Visual Studio, but the article we are referencing is for Visual Studio Code. Just want to confirm, before we get confused.

Best Regards,

Jeremias

Clarify - I’m using Visual Studio 2019

Yes, I have a lib that is already compiled and resides in a folder. For discussion’s sake, let’s call it “runtime/lib”, with a header file that uses it in “runtime/include”. I need to be able to copy the entire “runtime” folder over to the container. Does that mean I can place it into the “appconfig_0/work/” folder and that will copy it over?

For LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/#%application.appname%#/lib"

What does $LD_LIBRARY_PATH evaluate to? Something like /home/runtime/lib or C:\runtime\lib? I’m assuming it has to be the path that the container creates.

In the linker I add -lgpio to the command and give it a path. Since the build is happening in the container (which is Linux via WSL), does the path have to be the Linux path?

There is a section to add additional include paths too. Are those PC paths or container Linux paths?

little update-

I used the buildcommands option to create a folder and then copy files into it:

RUN mkdir -p /runtime

COPY runtime /runtime

I can see that it does add them to Dockerfile.debug (attached).

And I have the files I want to copy in the “appconfig_0” folder. Anywhere else, it creates an error.

I was expecting it to make a directory called “runtime” and copy the subfolders into it. I tried these commands in a separate Dockerfile that I created from the command line and it worked fine, but it doesn’t work with the Dockerfile in VS. Got any ideas? I feel we are close on this one. Maybe not :-)

More update -

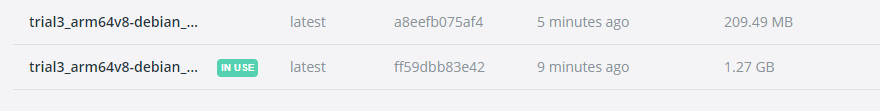

I did some digging. If I look at the Docker images created by the extension, it creates a bunch, but two of them stick around, as shown in the picture. The interesting thing is:

- The 209MB one has my runtime folder, so the COPY command is working.

- The 1.27GB one (which is created first) does not have it.

- If I open a shell in the 1.27GB container while the other packages are installing, I do see my runtime folder, but it gets deleted before the process ends. Any ideas why? Is it supposed to work that way?

Also, I think I found a bug. In the appconfig_0 window in VS, the “buildcommands” and “buildfiles” entries get placed in reverse order when they reach the Dockerfile.

Example - buildcommand was RUN mkdir -p /runtime

- buildfiles was COPY runtime /runtime

When I looked at the debug file it printed:

COPY runtime /runtime

RUN mkdir -p /runtime

Which obviously was not going to work. Seems like a bug, but I could be doing something wrong
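For what it is worth, in plain Docker the order of these two instructions should not matter by itself: `COPY` creates the destination directory if it does not exist, so the `mkdir` is redundant. A minimal sketch, assuming the `runtime` folder sits in the build context (here, the appconfig_0 folder):

```dockerfile
# COPY creates /runtime when it is missing, so no prior mkdir is required.
COPY runtime /runtime
```

So the reversed order in Dockerfile.debug is unlikely to be what makes the folder go missing. Whether the extension intends that ordering is a separate question.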

Hi @jeffbelz ,

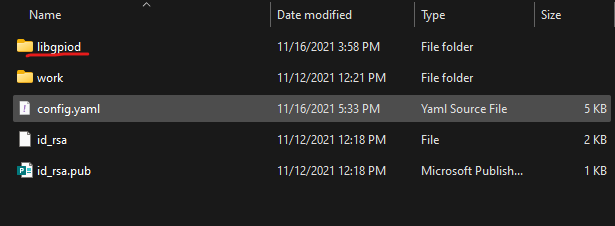

Try following these steps (using a copy of libgpiod as an example):

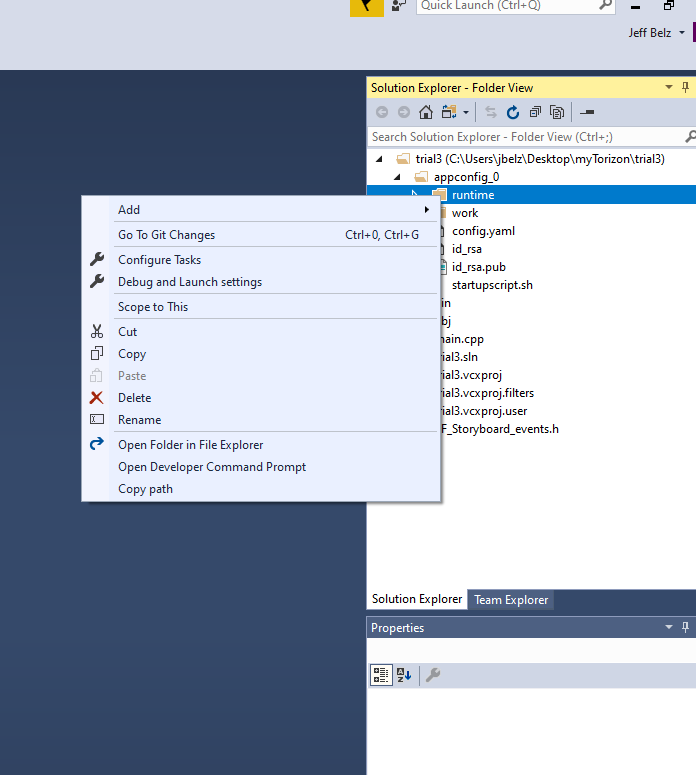

1- Copy the folder with the external library and headers to the appconfig_0 folder:

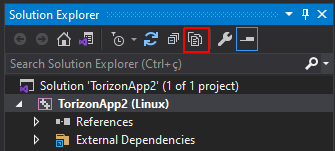

2- In Visual Studio, in Solution Explorer, select “Show All Files”:

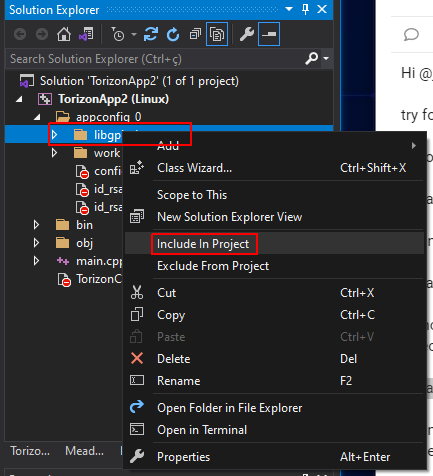

3- Include the content of the folder in the project. Right-click on the folder or files and select “Include In Project”:

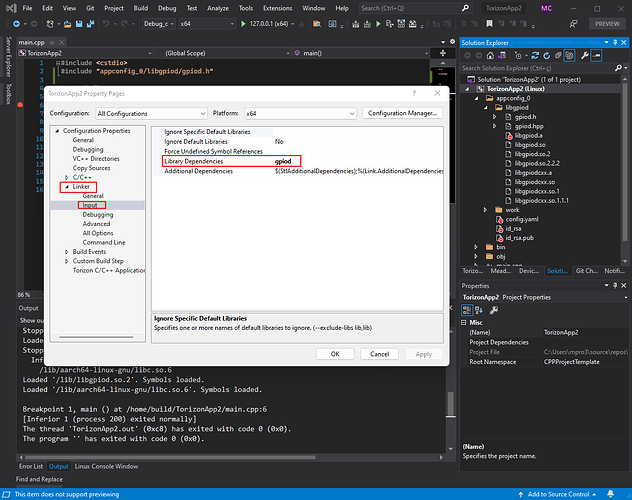

4- In the application properties, go to “Linker → Input” and set the “Library Dependencies”:

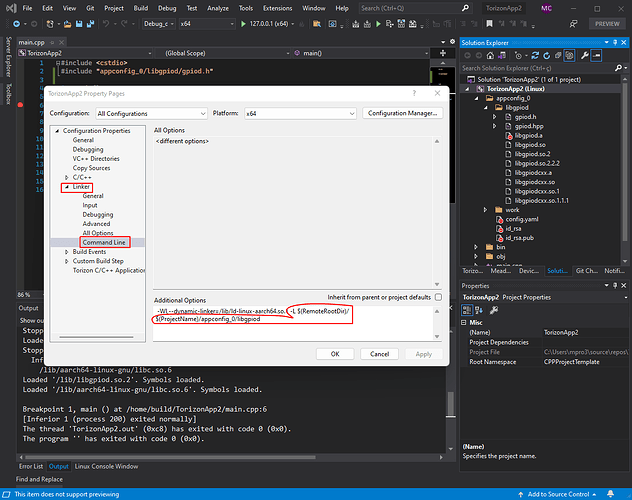

5- Still in the application properties, go to “Linker → Command Line” and add the following to “Additional Options”:

-L $(RemoteRootDir)/$(ProjectName)/appconfig_0/<changeME>

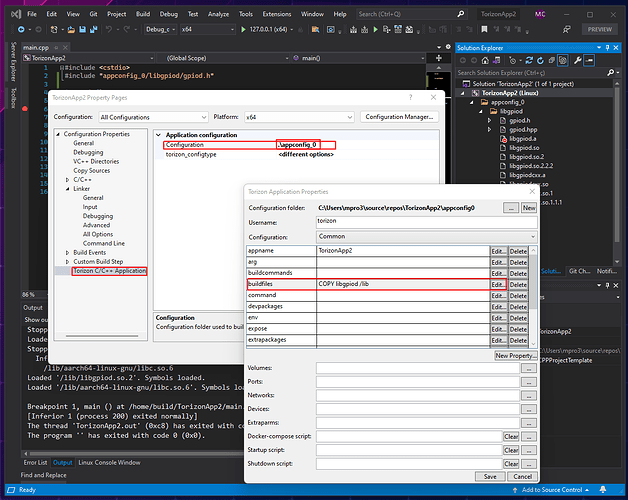

6- Still in the application properties, go to “Torizon C/C++ Application” and edit the configuration “.\appconfig_0 -> properties”, setting buildfiles to:

COPY <changeME> /lib

Save and apply the changes, you should be able to use your external library.
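Step 6 can be sketched as follows, assuming a hypothetical folder layout of `runtime/lib` (shared objects) and `runtime/include` (headers) under appconfig_0, as described earlier in the thread. Because `COPY` copies a directory's contents rather than the directory itself, pointing it at the library subfolder avoids ending up with `/lib/lib`:

```dockerfile
# Hypothetical layout under appconfig_0:
#   runtime/lib/      prebuilt shared objects
#   runtime/include/  headers (needed at compile time only)
# Copy just the shared objects into /lib in the application container.
COPY runtime/lib /lib
```

With that layout, the `-L` option from step 5 would presumably point at `$(RemoteRootDir)/$(ProjectName)/appconfig_0/runtime/lib`; the folder names are placeholders for whatever was copied in step 1.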

I was looking into doing this today and found out I don’t have an option to “Include In Project”. I’m wondering if this is a bug in the extension. What version of VS are you running, and what version of the extension?

Hold tight, I think I found the “add new folder” option.

Update -

It seems to work, except the COPY instruction does not work. I added each folder and then I had to add each file. It’s a pain, but I got it to work. I’ll call that a win for now.

Glad a method was found that works for your use case.

Best Regards,

Jeremias

Hey @jeffbelz

unfortunately we have the same problem. Thanks for your groundwork!

Did you get the project up and running?

We are still struggling with missing .so files.

Hi @billi1234,

Is your use case the same one as described in this thread?

Also, could you describe the exact issue you’re having as well as the steps you’re taking? It sounds like you’re trying to add external *.so files but some aren’t making it into the final build?

Best Regards,

Jeremias

I did get the project running, but I actually switched over to VS Code. I kept having issues with Studio, like IntelliSense just stopping working. Also, my company switched to VS2022 and the extension is not available for that yet. The biggest issue with Studio is that you can’t add your entire folder structure to the Solution Explorer. This is key for a remote Docker project because all files in the solution get copied to the Docker container. In VS Code, however, you can add an entire folder, in my case the Crank Storyboard SDIO lib, and I have had few issues since I switched. I fought tooth and nail not to switch to VS Code, because it’s very command-line focused and those JSON settings files are cryptic and very hard to understand.

As for the Studio extension, it still has bugs. For example, you can’t bind a folder location or use COPY commands the way you can in a Dockerfile. The extension actually makes two containers for some reason. The first one gets the COPY and folder binding, but later in the build sequence it makes another, larger container, which is the actual container it uses for debug, and the COPY and folder binding are no longer there. So if you mapped your lib folder, it won’t be copied to the container. Super frustrating.

You might be like me and super frustrated with the addition of Docker. It has made my development a living nightmare. I suspect most embedded Linux applications don’t need Docker in any way because the end use is for a specific device. There is no need for the complexity of Docker. I would love it if Toradex had a version of Torizon that does not have Docker. The GPIO lib and the support for other peripherals like UART and I2C, along with absolutely awesome support and documentation, are why I like Toradex. It really is a great company with good products. For now I would highly suggest you switch to VS Code. It’s not my favorite IDE, but for Torizon and Docker containers it seems to be more stable.

@jeffbelz

thank you for the detailed answer. That brings us further!

Hi @jeffbelz,

Could you elaborate on your frustration with Docker?

We’d like to always be able to improve our product based on customer feedback. So it’d be appreciated if you could give more detailed feedback. Also regarding your statement here:

I would love if toradex would have a version of torizon that does not have docker.

This is what our Embedded Linux BSP Reference images are essentially: BSP Layers and Reference Images for Yocto Project | Toradex Developer Center

You can try these, but the user experience is generally less friendly, especially if one is not used to working with the Yocto build system.

Best Regards,

Jeremias

Jeremias:

My use case for Linux is usually a user device that does x, y, or z: a machine that dispenses pills, or a machine that produces ice cream, which then connects to a cloud server. Basic IoT stuff with reading sensors, controlling motors, and controlling a fancy display. I use GPIO, I2C, SPI, UART, ADC, timers, and USB in some shape or form in every project I do. Since these are dedicated devices, I really only need one OS, and Debian suits my needs just fine. I just don’t need to add another layer to the mix to make portability from one OS to another easier. Hence, Docker is overkill for what I do.

The first issue I see with Docker is its inability to save the container. You have to create a brand new image every time you add something you want to keep, like a new daemon or application.

Another thing is that you have redundant applications between containers. For example, containers 1, 2, and 3 may all have “apt” or “nano” installed. Not a huge deal, but a little inefficient.

Remote debugging is where Docker makes things a lot harder. If you use a PC, you have to have Docker with Linux installed so the application can compile. We already saw how complex using an external lib got: making sure all files get copied to a temporary container, plus binding folders to save data, and then deploying remotely creates another container on another system. It is so much simpler without Docker containers.

There is an advantage to using Docker for larger systems and servers, and I can see web guys loving Docker. It’s got its place and advantages in some applications, but for a device-specific application like mine all it’s doing is adding complexity, and I’ll never take advantage of Docker’s architecture simply because I don’t have a need for it. Docker is like a Swiss Army knife, but all I need is a simple screwdriver.

I’ll play around with the reference images for Yocto. I’ve got some downtime before I really have to get back to my project. Maybe it’s what I’m looking for. Really, if I can remote debug and use the GPIO lib, I’m probably good.

You’re welcome to try our reference images. Just keep in mind the images are provided strictly as a reference. The OS will most likely need to be customized to suit any specific needs/requirements, which means adding any needed libraries or packages to the base OS if they are not present by default.

As for your feedback I have some questions/comments if you don’t mind:

You have to create a brand new image every time you add something you want to keep like a new deamon or application.

Just to understand, is the criticism that you need to rebuild the container image with every addition? For Yocto this wouldn’t be much different, except that now you have to build the entire OS image because you wanted to add a library.

Another thing is you have redundant applications between containers. For example, container 1, 2, and 3 may all have “apt” or “nano”

You could architect your container builds so that common elements are in a single base container to reduce duplication. For example if you have base container A, then create containers B & C which use A as a base. If you run containers B & C on a system, Docker is smart enough to not duplicate the common base that both use.
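A minimal sketch of that layering, using named build stages in a single Dockerfile. The base image `debian:bookworm-slim`, the stage names, and the package choices are assumptions for illustration, not what Torizon ships:

```dockerfile
# Base "A": tools that every container needs, installed once.
FROM debian:bookworm-slim AS base
RUN apt-get update \
    && apt-get install -y --no-install-recommends nano \
    && rm -rf /var/lib/apt/lists/*

# Container "B": adds only what is specific to it on top of the base.
FROM base AS app-b
RUN apt-get update \
    && apt-get install -y --no-install-recommends i2c-tools \
    && rm -rf /var/lib/apt/lists/*

# Container "C": also starts from the same base layers.
FROM base AS app-c
RUN apt-get update \
    && apt-get install -y --no-install-recommends can-utils \
    && rm -rf /var/lib/apt/lists/*
```

Each image can be built with `docker build --target app-b -t app-b .` (and likewise for `app-c`). Both resulting images reference the same base layers, so a device running both should store those layers only once.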

Best Regards,

Jeremias