Hi Kevin, thank you.

I’m working on a second PC today and I don’t get error 18 here, so I will try your solution later on the PC at home that fails.

But here, on my company machine, I’m having a similar issue:

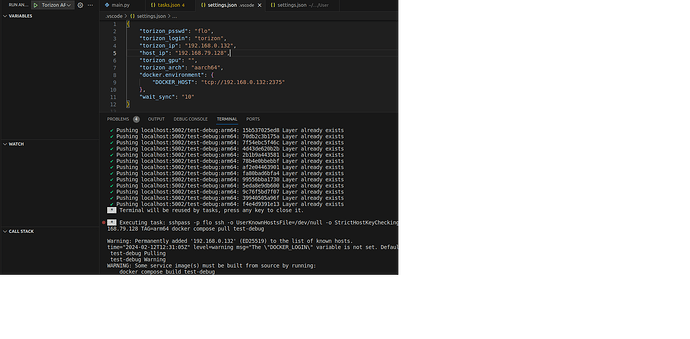

* The terminal process "sshpass '-p', 'flo', 'ssh', '-o', 'UserKnownHostsFile=/dev/null', '-o', 'StrictHostKeyChecking=no', 'torizon@192.168.0.132', 'LOCAL_REGISTRY=192.168.79.128 TAG=arm64 docker compose pull test-debug'" terminated with exit code: 18.

* Terminal will be reused by tasks, press any key to close it.

* Executing task: pwsh -nop .conf/validateDepsRunning.ps1

⚠️ VALIDATING ENVIRONMENT

✅ Environment is valid!

* Terminal will be reused by tasks, press any key to close it.

* Executing task: bash -c [[ ! -z "192.168.0.132" ]] && true || false

* Terminal will be reused by tasks, press any key to close it.

* Executing task: bash -c [[ "aarch64" == "aarch64" ]] && true || false

* Terminal will be reused by tasks, press any key to close it.

* Executing task: [ $(/bin/python3 -m pip --version | awk '{print $2}' | cut -d'.' -f1) -lt 23 ] && /bin/python3 -m pip install --upgrade pip || true

* Terminal will be reused by tasks, press any key to close it.

* Executing task: /bin/python3 -m pip install --break-system-packages -r requirements-debug.txt

Defaulting to user installation because normal site-packages is not writeable

Requirement already satisfied: debugpy in /home/flo/.local/lib/python3.10/site-packages (from -r requirements-debug.txt (line 1)) (1.8.1)

* Terminal will be reused by tasks, press any key to close it.

* Executing task: sleep 10

* Terminal will be reused by tasks, press any key to close it.

* Executing task: sshpass -p flo scp -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no /home/flo/test1/docker-compose.yml torizon@192.168.0.132:~/

Warning: Permanently added '192.168.0.132' (ED25519) to the list of known hosts.

* Terminal will be reused by tasks, press any key to close it.

* Executing task: DOCKER_HOST=192.168.0.132:2375 docker image prune -f --filter=dangling=true

Total reclaimed space: 0B

* Terminal will be reused by tasks, press any key to close it.

* Executing task: if [ false == false ]; then DOCKER_HOST=192.168.0.132:2375 docker compose -p torizon down --remove-orphans ; fi

Warning: No resource found to remove for project "torizon".

* Terminal will be reused by tasks, press any key to close it.

* Executing task: DOCKER_HOST= docker compose build --pull --build-arg SSHUSERNAME= --build-arg APP_ROOT= --build-arg IMAGE_ARCH=arm64 --build-arg SSH_DEBUG_PORT= --build-arg GPU= test-debug

WARN[0000] The "DOCKER_LOGIN" variable is not set. Defaulting to a blank string.

[+] Building 1.1s (15/15) FINISHED docker:default

=> [test-debug internal] load build definition from Dockerfile.debug 0.0s

=> => transferring dockerfile: 3.02kB 0.0s

=> [test-debug internal] load metadata for docker.io/torizon/debian:3.2.1-bookworm 0.9s

=> [test-debug internal] load .dockerignore 0.0s

=> => transferring context: 117B 0.0s

=> [test-debug 1/10] FROM docker.io/torizon/debian:3.2.1-bookworm@sha256:c645d6bc14f7d419340df0be25dbbe115ada029fa2e502a1c9149f335c 0.0s

=> [test-debug internal] load build context 0.0s

=> => transferring context: 157B 0.0s

=> CACHED [test-debug 2/10] RUN apt-get -q -y update && apt-get -q -y install openssl openssh-server rsync file 0.0s

=> CACHED [test-debug 3/10] RUN apt-get -q -y update && apt-get -q -y install && apt-get clean && apt-get autoremove && 0.0s

=> CACHED [test-debug 4/10] RUN python3 -m venv /.venv --system-site-packages 0.0s

=> CACHED [test-debug 5/10] COPY requirements-debug.txt /requirements-debug.txt 0.0s

=> CACHED [test-debug 6/10] RUN . /.venv/bin/activate && pip3 install --upgrade pip && pip3 install --break-system-packages -r 0.0s

=> CACHED [test-debug 7/10] COPY .conf/id_rsa.pub /id_rsa.pub 0.0s

=> CACHED [test-debug 8/10] RUN mkdir /var/run/sshd && sed 's@session\s*required\s*pam_loginuid.so@session optional pam_loginui 0.0s

=> CACHED [test-debug 9/10] RUN rm -r /etc/ssh/ssh*key && dpkg-reconfigure openssh-server 0.0s

=> CACHED [test-debug 10/10] COPY --chown=: ./src /src 0.0s

=> [test-debug] exporting to image 0.0s

=> => exporting layers 0.0s

=> => writing image sha256:0d8b3fc72a821b5baccc481970f6446ed2675194ee03c03659e074f2a5d143a4 0.0s

=> => naming to localhost:5002/test-debug:arm64 0.0s

* Terminal will be reused by tasks, press any key to close it.

* Executing task: DOCKER_HOST= docker compose push test-debug

WARN[0000] The "DOCKER_LOGIN" variable is not set. Defaulting to a blank string.

[+] Pushing 19/19

✔ Pushing localhost:5002/test-debug:arm64: c1e892f438c8 Layer already exists 0.0s

✔ Pushing localhost:5002/test-debug:arm64: 7c21bbe332f8 Layer already exists 0.0s

✔ Pushing localhost:5002/test-debug:arm64: 994ad98e0fc4 Layer already exists 0.0s

✔ Pushing localhost:5002/test-debug:arm64: 3540ca7728a9 Layer already exists 0.0s

✔ Pushing localhost:5002/test-debug:arm64: d93d8860ee31 Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: d83df379f505 Layer already exists 0.0s

✔ Pushing localhost:5002/test-debug:arm64: 15b537025ed8 Layer already exists 0.0s

✔ Pushing localhost:5002/test-debug:arm64: 70db2c3b175a Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: 7f54ebc5f46c Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: 4d43de620b2b Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: 2b1b9a443581 Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: 78b4e0bbebbf Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: af2e04463901 Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: fa80bad6bfa4 Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: 99556bba1730 Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: 5eda8e9db600 Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: 9c76f5bd7f07 Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: 39940505a96f Layer already exists 0.1s

✔ Pushing localhost:5002/test-debug:arm64: f4e4d9391e13 Layer already exists 0.1s

* Terminal will be reused by tasks, press any key to close it.

* Executing task: sshpass -p flo ssh -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no torizon@192.168.0.132 LOCAL_REGISTRY=192.168.79.128 TAG=arm64 docker compose pull test-debug

Warning: Permanently added '192.168.0.132' (ED25519) to the list of known hosts.

time="2024-02-12T12:31:05Z" level=warning msg="The \"DOCKER_LOGIN\" variable is not set. Defaulting to a blank string."

test-debug Pulling

test-debug Warning

WARNING: Some service image(s) must be built from source by running:

docker compose build test-debug

1 error occurred:

* Error response from daemon: Get "http://192.168.79.128:5002/v2/": dial tcp 192.168.79.128:5002: connect: network is unreachable

* The terminal process "sshpass '-p', 'flo', 'ssh', '-o', 'UserKnownHostsFile=/dev/null', '-o', 'StrictHostKeyChecking=no', 'torizon@192.168.0.132', 'LOCAL_REGISTRY=192.168.79.128 TAG=arm64 docker compose pull test-debug'" terminated with exit code: 18.

* Terminal will be reused by tasks, press any key to close it.

I’m a bit confused by the several IP addresses involved: the remote AM62 board where Docker runs, my Windows host machine running my Linux VM, etc.

I can also see port 5002 in the log, but in my settings.json file port 2375 is used.

NOTE: I had to add wait_sync to settings.json with a value of 10 seconds (an arbitrary value); otherwise I got a build error. Maybe this is a bug known to Toradex?

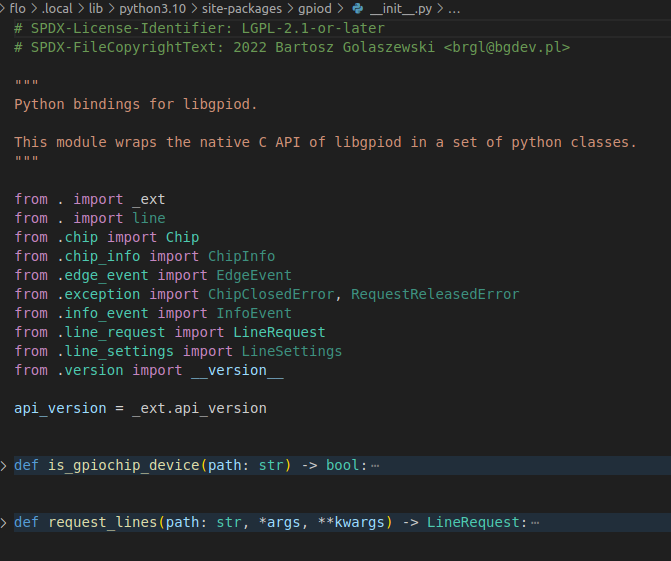

I’m using the simple Python container project.

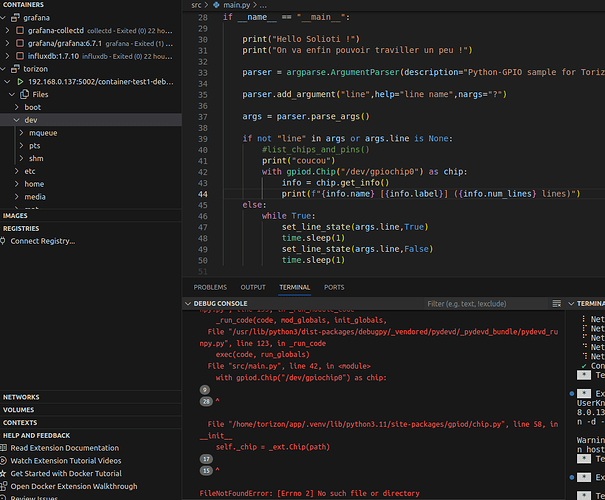

The error is:

Error response from daemon: Get "http://192.168.79.128:5002/v2/": dial tcp 192.168.79.128:5002: connect: network is unreachable
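To narrow down which address is the problem, here is a minimal sketch (assuming Python 3 is available on the machine where it is run) that only tests whether a TCP connection can be opened to the two endpoints that appear in the log above: 192.168.79.128:5002, which the board tries to pull from, and 192.168.0.132:2375, which the tasks use as DOCKER_HOST. The function name `can_connect` and the labels are my own, not something from the Torizon tooling.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection resolves the address and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "network is unreachable", "connection refused" and timeouts
        return False


if __name__ == "__main__":
    # Endpoints copied from the task log; the labels are only descriptive
    endpoints = [
        ("registry used by the pull", "192.168.79.128", 5002),
        ("Docker daemon on the board", "192.168.0.132", 2375),
    ]
    for label, host, port in endpoints:
        state = "reachable" if can_connect(host, port) else "NOT reachable"
        print(f"{label}: {host}:{port} is {state}")
```

Running it from the Linux VM and then from the board should show from which side 192.168.79.128:5002 cannot be reached. It only checks the TCP connection, not whether a registry actually answers on that port.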

But some lines point out that the DOCKER_LOGIN variable is not set. Is there normally something to do about that?

Thanks a lot for your help. I think I’m almost at the point of seeing ‘Hello’ in my terminal and I can’t wait for it; embedded is not a straightforward adventure.
