We are using Python 3.10 with the Python template from the Torizon extension, on Torizon OS 7.2.

I can’t get the code to run on the Ivy Plus board we have. The code is very simple, and it runs locally on my WSL Linux with no issues.

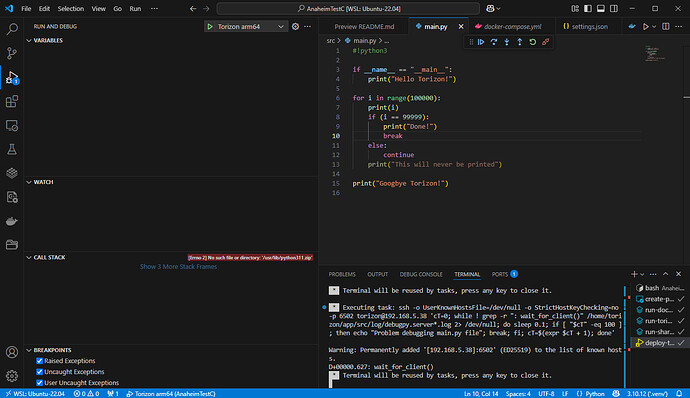

It seems to build the Docker image OK, but when it launches (or tries to), it just exits after a few seconds. Sometimes I can run the container from VSC separately and then attach a shell; when I query the version of python3, it indeed comes back as 3.10. However, sometimes after the launch it tries to load /usr/lib/python311.zip, which doesn’t exist, and I get a very tiny box saying that it can’t find it. I have tried deleting and recreating the image, and sometimes I get different results, but I can never debug it. Sometimes VSC won’t let me run the image either, even right after I just ran it.

There are no errors in the terminal, output, or debug output. Occasionally there are launch errors, but they don’t make any sense, and they usually appear right after I have just run it, so it seems more like the old Docker container is still around.

Hi, I’m the Toradex AI Assistant.


Hello! Thank you for your detailed explanation and the screenshot. I understand you’re experiencing issues running and debugging a Python 3.10 application deployed via the Torizon extension on your IVY Plus board, with sporadic references to Python 3.11 (python311.zip) and difficulties launching debug sessions.

Here are some guided steps to help isolate and solve these issues:

1. Python Version Consistency

- Please ensure that your `Dockerfile` or your project’s configuration explicitly installs and references Python 3.10. If an upgrade or a mix with Python 3.11 has occurred, strange behavior or missing files (as seen in your screenshot) can result.
- Open your `docker-compose.yml` and confirm that the base image (or the one in your `Dockerfile`) matches Python 3.10: `image: torizon/arm64v8-debian-python:3.10-bullseye`
- If you see any references to `python:3.11` or similar anywhere in your `docker-compose.yml`, `Dockerfile`, or requirements files, replace them with `3.10`.
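A quick way to confirm which interpreter the container really runs is a small script like the one below, run inside the container with the same `python3` the debugger uses (for example the one in the app's `.venv`). It is only a sketch: the expected version `(3, 10)` and the idea of saving it as a separate file are assumptions. Note that stock CPython lists `<prefix>/lib/pythonXY.zip` in `sys.path` whether or not that file exists, so seeing `/usr/lib/python311.zip` mainly tells you the interpreter is Python 3.11 (the default Python on Debian Bookworm).

```python
"""Report which Python interpreter is running and which sys.path entries are missing.

Sketch: run it inside the container with the same python3 the debugger uses,
then compare the reported version with the one you expect (assumed 3.10 here).
"""
import os
import sys


def interpreter_report(expected=(3, 10)):
    """Return a dict describing the running interpreter and its sys.path."""
    actual = sys.version_info[:2]
    return {
        "executable": sys.executable,
        "version": "%d.%d" % actual,
        "matches_expected": actual == tuple(expected),
        # CPython puts <prefix>/lib/pythonXY.zip on sys.path even when that file
        # does not exist, so an entry like /usr/lib/python311.zip showing up here
        # is normal; it mainly reveals that the interpreter is 3.11.
        "missing_path_entries": [p for p in sys.path if p and not os.path.exists(p)],
    }


if __name__ == "__main__":
    for key, value in interpreter_report().items():
        print(f"{key}: {value}")
```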

2. Clean Up Docker Artifacts

Leftover or conflicting Docker images/containers often cause “weird” errors and stability issues during development. Please try:

# On your development PC:

docker system prune -a

# On your target board (via SSH):

docker system prune -a

This removes all stopped containers, unused networks, images not used by any container, and the build cache.

Be careful: this deletes all of those unused resources on the machine where you run it.

3. Check Requirements File

Your requirements should be split for each environment as per Torizon best practice:

- `requirements-local.txt`
- `requirements-debug.txt`
- `requirements-release.txt`

Ensure you are only installing packages compatible with Python 3.10, and that none of them require 3.11. For more detail:

4. Dependency Check in VS Code

- When you start your project in VS Code (using the Torizon IDE extension), it should prompt “Do you want to check for dependencies on your system?”. Click “Yes” and make sure there are no warnings or errors.

- Install the Python extension for VS Code if not already present.

5. Initial Deployment May Take Longer

- The first build/deploy can take several minutes, as it builds the base image and sets up the container. Allow the process to complete.

6. Monitor the Container Launch and Logs

- Use `docker ps -a` to list containers and `docker logs <container-id>` to examine the error output from failed starts.
- There may be error messages visible only here, not in VS Code.
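Step 6 can be scripted. The helper below is a hypothetical sketch, not part of the Torizon tooling: it asks `docker ps -a` for each container's name and status, picks out the ones whose status starts with `Exited (N)`, and returns their exit codes so you know which names to pass to `docker logs`. To query the board instead of the PC, pass its Docker endpoint (assumed here to be of the form `tcp://<board-ip>:2375`) as `docker_host`.

```python
"""List exited containers and their exit codes from `docker ps -a` output.

Assumes each line has the form "<name><TAB><status>", which is what
`docker ps -a --format '{{.Names}}\t{{.Status}}'` produces, for example
"torizon-test8-debug-1<TAB>Exited (255) 3 seconds ago".
"""
import re
import subprocess

EXITED = re.compile(r"^Exited \((?P<code>-?\d+)\)")


def exited_containers(ps_output):
    """Return {container_name: exit_code} for every exited container."""
    result = {}
    for line in ps_output.splitlines():
        if "\t" not in line:
            continue  # skip blank or unexpected lines
        name, status = line.split("\t", 1)
        match = EXITED.match(status.strip())
        if match:
            result[name.strip()] = int(match.group("code"))
    return result


def docker_ps_output(docker_host=None):
    """Run `docker ps -a` locally or against docker_host (requires the docker CLI)."""
    cmd = ["docker"]
    if docker_host:
        cmd += ["-H", docker_host]
    cmd += ["ps", "-a", "--format", "{{.Names}}\t{{.Status}}"]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


if __name__ == "__main__":
    for name, code in exited_containers(docker_ps_output()).items():
        # Next step: docker logs <name>
        print(f"{name} exited with code {code}")
```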

7. Reload VS Code After Changes

- After making changes to `docker-compose.yml` or your requirements files, reload the VS Code window to sync the IDE extension state.

8. Remote Debugging Configuration

If you’re using remote debugging:

- Make sure your launch configuration points to Python 3.10 and the correct container.

- Details for peripheral access and debugging:

Application Development - Python Example | Toradex Developer Center

Summary Checklist

- Your Docker image is explicitly Python 3.10.

- No stray references to 3.11 in any config/requirements.

- VS Code and all IDE extensions up to date.

- Docker system and containers/images are cleaned up.

- Logs checked for more clues.

If you follow these steps and the problem persists, please report:

- The exact base image line from your `docker-compose.yml` or `Dockerfile`
- The output of `docker images` and `docker ps -a` on your target after a failed run
- Any specific error screenshots

Let me know how it goes!

Best regards,

Toradex AI Assistant.

Hey @EvetsMostel1,

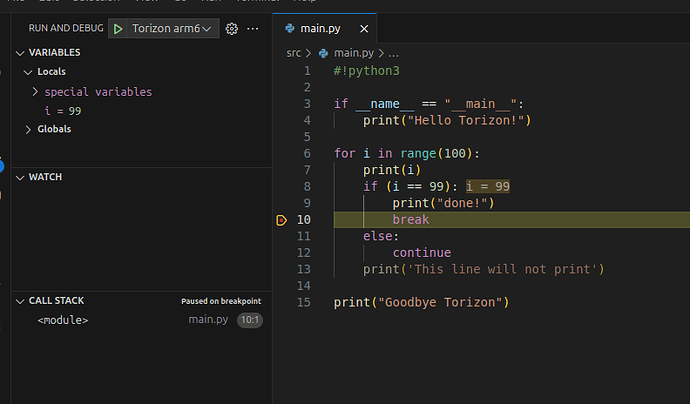

I was able to run your same debug code in a new build, and it worked as expected with breakpoints.

I’m not sure what is causing this error. As a sanity check, can you run the task runner command run-container-torizon-release-arm64 to validate the pipeline between building and deploying on the module?

Hi Eric,

Well, I went back to the drawing board and recreated the project. Now I can’t debug at all. Every time I try, I see a tiny error flag for about 3 seconds that says “Can’t open file /usr/lib/python311.zip”.

I also posted this on another thread, because I am having an issue with the normal drop-down selector used to pick the launch configuration: it disappeared at some point and won’t come back.

I can run the Docker container, and the Python file inside it, just fine.

Just out of curiosity, this is my VSC version:

Version: 1.100.2 (user setup)

Commit: 848b80aeb52026648a8ff9f7c45a9b0a80641e2e

Date: 2025-05-14T21:47:40.416Z

Electron: 34.5.1

ElectronBuildId: 11369351

Chromium: 132.0.6834.210

Node.js: 20.19.0

V8: 13.2.152.41-electron.0

OS: Windows_NT x64 10.0.19045

What version do you have? And what version of Linux are you running?

Steve

I just tried to create a new C/C++ console project in Ubuntu 20.04.

The apolloX had this problem:

Traceback (most recent call last):

File "/home/steve/.local/bin/xonsh", line 8, in

sys.exit(main())

File "/home/steve/.local/pipx/venvs/xonsh/lib/python3.8/site-packages/xonsh/main.py", line 475, in main

_failback_to_other_shells(args, err)

File "/home/steve/.local/pipx/venvs/xonsh/lib/python3.8/site-packages/xonsh/main.py", line 422, in _failback_to_other_shells

raise err

File "/home/steve/.local/pipx/venvs/xonsh/lib/python3.8/site-packages/xonsh/main.py", line 473, in main

sys.exit(main_xonsh(args))

File "/home/steve/.local/pipx/venvs/xonsh/lib/python3.8/site-packages/xonsh/main.py", line 565, in main_xonsh

print_exception(None, exc_info)

File "/home/steve/.local/pipx/venvs/xonsh/lib/python3.8/site-packages/xonsh/tools.py", line 1064, in print_exception

display_colored_error_message(exc_info)

File "/home/steve/.local/pipx/venvs/xonsh/lib/python3.8/site-packages/xonsh/tools.py", line 1115, in display_colored_error_message

import pygments.lexers.python

ModuleNotFoundError: No module named 'pygments'

Hey @EvetsMostel1,

Ubuntu 24.04 for myself.

Are you working with Torizon 6 or 7?

It might be worth removing the entire extension and running through the setup again.

-Eric

@eric.tx

Torizon 7.3, and I tried 7.2 as well.

Yep, I have done that (removed and reinstalled the extension) several times. Each time, it seems that VSC has some update, so I run it from the WSL side instead of the Windows side, because it doesn’t always want to connect to WSL.

With 22.04 I initially had issues getting full internet access (apparently there is a known bug with that), so I was afraid to move to 24.04 and run into more issues, although I might try it to see if that is the best way to get this done. I even tried a C/C++ template, with similar results. This used to work flawlessly: I did the C/C++ version a lot in the past (about a year ago), but not recently, until now.

The best I’ve gotten with Python is to get it to build the Docker image, but it won’t run main.py, and when I look inside the running container, main.py is nowhere to be found. On top of that, it tries to access a /usr/lib/python311.zip file that doesn’t exist in the Docker image either.

Steve

@eric.tx

Also, the default is to run it locally, even if you are connected to a Torizon board. If I selected arm64 from the command palette (the only way I can do it now, as the dropdown no longer shows up), it wasn’t building the Docker image to launch. When I created another test this morning with the latest template, it does create the image, but again, the hello world main.py is not found in the /home/user/torizon/app folder or anywhere else:

find / -name main.py

/usr/lib/python3.11/test/libregrtest/main.py

/usr/lib/python3.11/unittest/main.py

/usr/lib/python3.11/lib2to3/main.py

/usr/lib/python3/dist-packages/pip/_internal/cli/main.py

/usr/lib/python3/dist-packages/pip/_internal/main.py

/home/torizon/app/.venv/lib/python3.11/site-packages/pip/_internal/cli/main.py

/home/torizon/app/.venv/lib/python3.11/site-packages/pip/_internal/main.py

@eric.tx

Just so we are on the same page: I created an Ubuntu 24.04 distro and had to update Docker and the distro quite a bit, and there were still things I had to fix by stopping VSC and rerunning it. PowerShell was the first of the bigger things; I think I had to install xonsh manually, and I had to reboot the device a few times (ports were not open, as if they were not getting closed), otherwise some of the changes had no effect. Eventually, I got it to run ON the device. However, when it stops at a breakpoint, it only stays there for a few seconds and then it is no longer connected, as if something times out. Is there any way to fix this?

Then, the last time, after I reset the board, it gave the error “no such file or directory /usr/lib/python311.zip” again.

I am on Windows 10. Here’s the last set of logs:

- Executing task: xonsh /home/steve/test8/.conf/torizon-packages.xsh arm64

Applying torizonPackages.json:

Applying to Dockerfile.debug …

Dockerfile.debug

Applying to Dockerfile …

Dockerfile

torizonPackages.json applied

-

Terminal will be reused by tasks, press any key to close it.

-

Executing task: DOCKER_HOST= docker compose build --pull --build-arg SSHUSERNAME=torizon --build-arg APP_ROOT=/home/torizon/app --build-arg IMAGE_ARCH=arm64 --build-arg DEBUG_SSH_PORT=6502 --build-arg GPU=-imx8 test8-debug

WARN[0000] The "DOCKER_LOGIN" variable is not set. Defaulting to a blank string.

Compose can now delegate builds to bake for better performance.

To do so, set COMPOSE_BAKE=true.

[+] Building 0.8s (14/14) FINISHED docker:default

=> [test8-debug internal] load build definition from Dockerfile.debug 0.0s

=> => transferring dockerfile: 2.79kB 0.0s

=> [test8-debug internal] load metadata for docker.io/torizon/debian:4 0.6s

=> [test8-debug internal] load .dockerignore 0.0s

=> => transferring context: 117B 0.0s

=> [test8-debug 1/8] FROM docker.io/torizon/debian:4@sha256:cca23c84946621ee72aae4593da5f3e004bf211c28e577810b1068bde5ed414c 0.0s

=> [test8-debug internal] load build context 0.0s

=> => transferring context: 43B 0.0s

=> CACHED [test8-debug 2/8] RUN apt-get -q -y update && apt-get -q -y install openssl openssh-server rsync file screen p 0.0s

=> CACHED [test8-debug 3/8] RUN apt-get -q -y update && apt-get -q -y install && apt-get clean && apt-get autoremove && rm -rf /var/ 0.0s

=> CACHED [test8-debug 4/8] RUN python3 -m venv /home/torizon/app/.venv --system-site-packages 0.0s

=> CACHED [test8-debug 5/8] COPY requirements-debug.txt /requirements-debug.txt 0.0s

=> CACHED [test8-debug 6/8] RUN . /home/torizon/app/.venv/bin/activate && pip3 install --upgrade pip && pip3 install -r requirements-debug.txt & 0.0s

=> CACHED [test8-debug 7/8] RUN mkdir /var/run/sshd && sed 's@session\srequired\spam_loginuid.so@session optional pam_loginuid.so@g' - 0.0s

=> CACHED [test8-debug 8/8] RUN rm -r /etc/ssh/ssh*key && dpkg-reconfigure openssh-server 0.0s

=> [test8-debug] exporting to image 0.0s

=> => exporting layers 0.0s

=> => writing image sha256:ec79f03cf54e04340fac95066a7cee8ee336d40cb82af5cc8c98b919c8d69b4c 0.0s

=> => naming to localhost:5002/test8-debug:arm64 0.0s

=> [test8-debug] resolving provenance for metadata file 0.0s

[+] Building 1/1

test8-debug Built 0.0s

-

Terminal will be reused by tasks, press any key to close it.

-

Executing task: DOCKER_HOST= docker compose push test8-debug

WARN[0001] The "DOCKER_LOGIN" variable is not set. Defaulting to a blank string.

[+] Pushing 19/19

Pushing localhost:5002/test8-debug:arm64: 391dea59d050 Layer already exists 0.3s

Pushing localhost:5002/test8-debug:arm64: c88e8fa04aa6 Layer already exists 0.3s

Pushing localhost:5002/test8-debug:arm64: 4ef3324269b1 Layer already exists 0.3s

Pushing localhost:5002/test8-debug:arm64: ccba70678d4c Layer already exists 0.3s

Pushing localhost:5002/test8-debug:arm64: e4316b12b1af Layer already exists 0.3s

Pushing localhost:5002/test8-debug:arm64: b3dc529cad80 Layer already exists 0.6s

Pushing localhost:5002/test8-debug:arm64: bb1f38e64961 Layer already exists 0.6s

Pushing localhost:5002/test8-debug:arm64: ef5ab135b4e1 Layer already exists 0.6s

Pushing localhost:5002/test8-debug:arm64: 0d44ca85e203 Layer already exists 0.6s

Pushing localhost:5002/test8-debug:arm64: cbfca7efdd64 Layer already exists 0.6s

Pushing localhost:5002/test8-debug:arm64: c5852bef3e08 Layer already exists 0.9s

Pushing localhost:5002/test8-debug:arm64: 6cd757f87b26 Layer already exists 0.9s

Pushing localhost:5002/test8-debug:arm64: 84f02289282b Layer already exists 0.9s

Pushing localhost:5002/test8-debug:arm64: db9633f880e7 Layer already exists 0.9s

Pushing localhost:5002/test8-debug:arm64: ab925bd8dc65 Layer already exists 0.9s

Pushing localhost:5002/test8-debug:arm64: 5f70bf18a086 Layer already exists 1.2s

Pushing localhost:5002/test8-debug:arm64: ac6442dc9f87 Layer already exists 1.2s

Pushing localhost:5002/test8-debug:arm64: 169ce75e923c Layer already exists 1.2s

Pushing localhost:5002/test8-debug:arm64: 66a307619b8c Layer already exists 1.2s

-

Terminal will be reused by tasks, press any key to close it.

-

Executing task: DOCKER_HOST=192.168.5.38:2375 LOCAL_REGISTRY=192.168.5.10 TAG=arm64 GPU=-imx8 DEBUG_SSH_PORT=6502 DEBUG_PORT1=6512 DEBUG_PORT2= DEBUG_PORT3= docker compose pull test8-debug

WARN[0000] The "DOCKER_LOGIN" variable is not set. Defaulting to a blank string.

[+] Pulling 1/1

test8-debug Pulled 0.2s

-

Terminal will be reused by tasks, press any key to close it.

-

Executing task: DOCKER_HOST=192.168.5.38:2375 LOCAL_REGISTRY=192.168.5.10 TAG=arm64 GPU=-imx8 DEBUG_SSH_PORT=6502 DEBUG_PORT1=6512 DEBUG_PORT2= DEBUG_PORT3= docker compose -p torizon up -d test8-debug

WARN[0000] The "DOCKER_LOGIN" variable is not set. Defaulting to a blank string.

WARN[0000] Found orphan containers ([torizon-pantilt-1]) for this project. If you removed or renamed this service in your compose file, you can run this command with the --remove-orphans flag to clean it up.

[+] Running 1/1

Container torizon-test8-debug-1 Started 0.9s

-

Terminal will be reused by tasks, press any key to close it.

-

Executing task: ssh -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no -p 6502 torizon@192.168.5.38 pkill python3 || true

Warning: Permanently added '[192.168.5.38]:6502' (ED25519) to the list of known hosts.

-

Terminal will be reused by tasks, press any key to close it.

-

Executing task: rsync -P -av -e ssh -p 6502 -o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null /home/steve/test8/src torizon@192.168.5.38:/home/torizon/app/

Warning: Permanently added '[192.168.5.38]:6502' (ED25519) to the list of known hosts.

sending incremental file list

src/

src/main.py

385 100% 0.00kB/s 0:00:00 (xfr#1, to-chk=0/2)

sent 528 bytes received 39 bytes 1,134.00 bytes/sec

total size is 385 speedup is 0.68

-

Terminal will be reused by tasks, press any key to close it.

-

Executing task: ssh -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no -p 6502 torizon@192.168.5.38 cd /home/torizon/app && . /home/torizon/app/.venv/bin/activate && screen -d -m python3 -m debugpy --listen 0.0.0.0:6512 --log-to src/log --wait-for-client src/main.py

Warning: Permanently added '[192.168.5.38]:6502' (ED25519) to the list of known hosts.

-

Terminal will be reused by tasks, press any key to close it.

-

Executing task: ssh -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no -p 6502 torizon@192.168.5.38 'cT=0; while ! grep -r ": wait_for_client()" /home/torizon/app/src/log/debugpy.server*.log 2> /dev/null; do sleep 0.1; if [ "$cT" -eq 100 ]; then echo "Problem debugging main.py file"; break; fi; cT=$(expr $cT + 1); done'

Warning: Permanently added '[192.168.5.38]:6502' (ED25519) to the list of known hosts.

D+00000.667: wait_for_client()

- Terminal will be reused by tasks, press any key to close it.
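The last two tasks in this log start debugpy with `--wait-for-client` under `screen`, then poll `src/log/debugpy.server*.log` until it contains `: wait_for_client()`, giving up after 100 iterations of `sleep 0.1` (roughly 10 seconds). For readers who find the one-line shell loop hard to follow, here is the same logic as a Python sketch; the function name and defaults are illustrative and not part of the Torizon templates.

```python
"""Python equivalent of the shell loop that waits for debugpy to be ready.

Sketch only: the log directory and marker string mirror the task above,
while the function name and defaults are hypothetical.
"""
import glob
import os
import time

MARKER = ": wait_for_client()"


def wait_for_debugpy(log_dir, timeout=10.0, interval=0.1):
    """Return True once a debugpy.server*.log in log_dir contains MARKER.

    Returns False if the marker has not appeared within `timeout` seconds,
    which is the case where the task prints "Problem debugging main.py file".
    """
    deadline = time.monotonic() + timeout
    pattern = os.path.join(log_dir, "debugpy.server*.log")
    while True:
        for path in glob.glob(pattern):
            try:
                with open(path, encoding="utf-8", errors="replace") as fh:
                    if MARKER in fh.read():
                        return True
            except OSError:
                pass  # the log may still be being created; try again next round
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    ready = wait_for_debugpy("/home/torizon/app/src/log")
    print("debugpy is waiting for a client" if ready else "Problem debugging main.py file")
```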

@eric.tx

Today I discovered that, according to VSC, there were some problems in the “problemMatcher” entries of the “build-container-image-sdk-xxxx” tasks in the tasks.json file. All 3 of them (“arm”, “arm64” and “amd64”) had $gcc, but VSC showed the list of valid values, and $gcc was not one of them.

[{

"resource": "/home/steve/test8/.vscode/tasks.json",

"owner": "generated_diagnostic_collection_name#5",

"code": "1",

"severity": 4,

"message": "Value is not accepted. Valid values: "$msCompile", "$lessCompile", "$gulp-tsc", "$jshint", "$jshint-stylish", "$eslint-compact", "$eslint-stylish", "$go", "$nvcc", "$lessc", "$node-sass", "$tsc", "$tsc-watch", "$python".",

Not sure what would be correct there. $python?

Today, I tried increasing the “wait-a-bit” delay from 1 second up to 20 seconds. I got more consistent results and could get all the way into debugging. However, if it stops at a breakpoint, it waits and then disconnects.

I can run the Docker image manually and continuously with no issues, so it’s not as if the container is dying on its own. Something is telling it to quit; I just don’t know what.
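Rather than tuning a fixed “wait-a-bit” delay, one option is to wait until the container is actually reachable. The sketch below is a hypothetical helper, not something the template provides: it polls a TCP port (for example the container's SSH port 6502 used by the tasks in the log above) until it accepts connections or a timeout expires. It deliberately does not probe the debugpy port (6512), on the assumption that an extra connection there could be mistaken for a debug client.

```python
"""Poll a TCP port until it accepts connections, instead of sleeping a fixed time.

Hypothetical helper: which port to wait on (e.g. the container's SSH port)
and the timeout values are assumptions to adapt to your setup.
"""
import socket
import time


def wait_for_port(host, port, timeout=20.0, interval=0.25):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # Open and immediately close a connection just to test reachability.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)  # refused or timed out: try again shortly
    return False


if __name__ == "__main__":
    print(wait_for_port("192.168.5.38", 6502))
```

Compared with a fixed delay, this returns as soon as the port is up, and the timeout only bounds the worst case.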

Hey @EvetsMostel1,

The issue you are having with PowerShell and xonsh is most likely due to a mismatch between the Torizon OS version your templates target and the one you need. On Windows with WSL, if you are building for Torizon 6, you need PowerShell; if you are on Torizon 7, you need xonsh.

Another possible issue: if you are building for Torizon 6 but have started new Torizon template projects, they default to Torizon 7 templates, which would most likely cause problems.

Does this seem like it would be the issue with what versions you are using?

-Eric

@eric.tx ,

I am using Torizon 7.2/7.3, and xonsh is installed. I have been using it basically since I started, and I did notice that the templates were updated, I believe on Wednesday last week. At times I have also tried starting new projects and using 24.04. This seems to get me (mostly) back to where I was originally with a Python project: I can run the code on the local PC, and stepping and breakpoints all work as expected.

However, with Torizon on the Ivy and the Verdin iMX8MP, I can run the code, but if I set a breakpoint, or add a loop that lasts longer than about 5 seconds, the code is stopped and the container is closed. This is the issue I am trying to get around at the moment, and I only get this far because I added the delay. Without the delay, it never actually gets to run and errors out with an error code of 255, which I believe is what happens if the container is shut down.

Hey @EvetsMostel1,

Is this with the same code example you shared earlier? I tried it on the iMX8MP with the Verdin Development Board, and it ran as expected with breakpoints. The code example doesn’t really interact with anything on the carrier board, so I don’t suspect the Ivy is the cause.

-Eric

@eric.tx

No, I don’t believe this is an IVY issue. I think it is an issue with the debugging setup in the template, specifically in the tasks.

For instance, you said that under Torizon 7 it needs xonsh and not PowerShell. However, when I start VSC, it still runs PowerShell. Is that normal, or am I misunderstanding?

It checks for xonsh, but it also runs PowerShell at startup. Here is the output in VSC:

[05-29 07:51:13.959] Activating Torizon IDE …

[05-29 07:51:13.960] Torizon IDE version :: 3.0.4

[05-29 07:51:16.662] Latest Torizon IDE published :: 3.0.119

[05-29 07:51:16.662] Telemetry is enabled

[05-29 07:51:16.662] Extension collects completely anonymous telemetry data about templates usage. Participation in this anonymous program is optional, and you may opt-out if you’d not like to share any information. Check: Settings - Data Collection | Toradex Developer Center

[05-29 07:51:16.662] Telemetry allows us to accurately gauge templates usage. This data will help us to focus better on the most used templates, adding new features and putting effort in the right place.

[05-29 07:51:16.662] Resolving host IP address …

[05-29 07:51:18.493] Host IP address OK

[05-29 07:51:18.505] Docker installed OK

[05-29 07:51:18.638] Docker running OK

[05-29 07:51:19.393] Docker compose OK

[05-29 07:51:19.524] PowerShell OK

[05-29 07:51:19.542] git OK

[05-29 07:51:19.557] dig OK

[05-29 07:51:19.566] avahi-resolve OK

[05-29 07:51:19.624] nmap OK

[05-29 07:51:19.632] iputils-ping OK

[05-29 07:51:19.733] file OK

[05-29 07:51:19.742] sshpass OK

[05-29 07:51:19.751] iproute2 OK

[05-29 07:51:19.760] rsync OK

[05-29 07:51:19.769] python3 OK

[05-29 07:51:19.777] pipx OK

[05-29 07:51:19.778] Cloning project templates

[05-29 07:51:19.778] Templates repo: GitHub - torizon/vscode-torizon-templates: VS Code Torizon Integrated Development Environment Templates

[05-29 07:51:19.778] Templates tag/hash: v3.0.4

[05-29 07:51:19.789] Project templates clone of branch OK

[05-29 07:51:20.320] Project templates fetched OK

[05-29 07:51:20.336] Checkout templates repo to tag v3.0.4 OK

[05-29 07:51:21.939] xonsh installation OK

[05-29 07:51:21.940] Checking connected devices …

[05-29 07:51:21.940] Scanning Torizon devices …

[05-29 07:51:21.951] Debian bookworm packages list load OK

[05-29 07:51:21.952] Debug id_rsa OK

[05-29 07:51:22.114] Checking host ip sanity …

[05-29 07:51:24.322] Torizon IDE extension activated

[05-29 07:51:24.491] Host ip is OK

[05-29 07:51:24.539] Device 192.168.5.38 set as Docker Host

@eric.tx ,

In my current program, which is very similar to the one I gave you before, I have one extra print statement at the end, and that is where I put a breakpoint. The loop only goes to 100, so it usually finishes without issues and stops at the last line. All is well, except that after about 2-3 seconds, it disconnects. Either something is closing down the container, or the debugger disconnects and the container is then closed automatically; it is hard to tell which.

I saw that there was some “debug” support built into the .xsh file, so I tried turning it on, but I got no extra output that I can see. If I use a longer loop, the output from the print statements stops before the end of the loop, which suggests to me that something is closing the container, either because of a timeout or because it thinks the program is no longer running.
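The print output stopping partway through the loop may partly be a buffering effect rather than the real point at which the program stopped: when stdout is not a terminal, Python block-buffers it, and lines still in the buffer are lost if the process is killed. The sketch below is a hypothetical variant of the loop-to-100 test (`configure_output` and `count_to` are illustrative names). It switches stdout to line buffering and stamps each line with the elapsed time, so the last visible line is a more reliable indicator of how far the program got. Setting `PYTHONUNBUFFERED=1` in the container environment should have a similar effect.

```python
import sys
import time


def configure_output() -> bool:
    """Switch stdout to line buffering so each print is written out immediately."""
    if hasattr(sys.stdout, "reconfigure"):
        sys.stdout.reconfigure(line_buffering=True)
    return bool(getattr(sys.stdout, "line_buffering", False))


def count_to(limit: int) -> float:
    """Print one timestamped line per iteration and return the total elapsed time."""
    start = time.monotonic()
    print(f"stdout is a TTY: {sys.stdout.isatty()}")
    for i in range(limit):
        print(f"{i} at {time.monotonic() - start:.2f}s")
    elapsed = time.monotonic() - start
    print(f"done after {elapsed:.2f}s")  # the line a breakpoint would go on
    return elapsed
```

Calling `configure_output()` once and then `count_to(100)` reproduces the test described here. If the last printed timestamp is consistently around the same value, that points toward a fixed timeout somewhere rather than a problem in the loop itself.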

Is there some way to turn on extra output (debug or verbose) that would lead to some idea of what is happening?

Thanks,

Steve

@eric.tx ,

As of a little while ago, I am getting this error in VSC when I try to set the current device as the default device (by clicking “set default”): “Unable to write to Workspace Settings because docker.environment is not a registered configuration.” It still connects to the device and does all the right things, but maybe something is different now. I can’t find this error posted anywhere on the web.

Hey,

This particular error is caused by Microsoft’s update to their legacy Docker extension. Switching to the pre-release version of the Torizon extension should solve it.

@eric.tx

OK, I did that, and now I don’t get the error and can debug again. However, the problem remains: if I hit a breakpoint, or the program runs too long (~10 seconds?), the program just stops and the container goes away.

Hey @EvetsMostel1,

Are you able to test this on a new Python project? I can’t seem to recreate the issue. Is the error that is showing related to the .zip file from your other ticket?
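To check which interpreter the container actually runs, it may help to print the version and the `.zip` entries on `sys.path`. CPython adds a `pythonXY.zip` entry (for example `/usr/lib/python310.zip` for 3.10) to `sys.path` whether or not that file exists, so a `python311.zip` entry would suggest that a 3.11 interpreter is running, possibly the default `python3` of Debian bookworm, which the extension log references. This is a hypothetical diagnostic; `interpreter_report` is an illustrative name, not part of the template.

```python
import sys


def interpreter_report() -> dict:
    """Collect the facts needed to tell which Python the container actually runs."""
    tag = f"python{sys.version_info.major}{sys.version_info.minor}"
    zip_entries = [p for p in sys.path if p.endswith(".zip")]
    return {
        "executable": sys.executable,
        "version": sys.version.split()[0],
        "expected_zip_tag": tag,
        "zip_entries": zip_entries,
        # Any .zip entry that does not match the running version is suspicious.
        "mismatched_zip": [p for p in zip_entries if tag not in p],
    }


if __name__ == "__main__":
    for key, value in interpreter_report().items():
        print(f"{key}: {value}")
```

Running this once from the debug session and once from a shell attached to the same container would show whether both paths use the same interpreter.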

-Eric