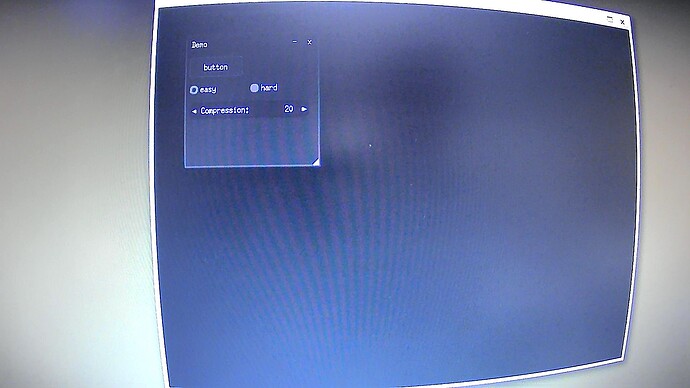

This one baffles me. With a little tweaking to put all the source in one directory, this demo works fine:

https://github.com/Immediate-Mode-UI/Nuklear/tree/master/demo/x11

The app runs in an ugly terminal window instead of taking over the screen, but it runs.

In both this working demo and the x11 OpenGL example, main.c makes this same call at startup (only the struct name differs):

    xw.dpy = XOpenDisplay(NULL);

I run the x11 demo this way:

    docker run -e ACCEPT_FSL_EULA=1 -d --rm --name=fred \
        --net=host --cap-add CAP_SYS_TTY_CONFIG \
        -v /dev:/dev -v /tmp:/tmp -v /run/udev/:/run/udev/ \
        --device-cgroup-rule='c 4:* rmw' \
        --device-cgroup-rule='c 13:* rmw' \
        --device-cgroup-rule='c 199:* rmw' \
        --device-cgroup-rule='c 226:* rmw' \
        seasonedgeek/nuklear-x11-demo --developer weston-launch \
        --tty=/dev/tty7 --user=torizon

    docker run -e ACCEPT_FSL_EULA=1 -d --rm --name=ethyl --user=torizon \
        -v /dev/dri:/dev/dri -v /dev/galcore:/dev/galcore \
        -v /tmp:/tmp \
        --device-cgroup-rule='c 199:* rmw' \
        --device-cgroup-rule='c 226:* rmw' \
        seasonedgeek/nuklear-x11-demo launch-x11-demo

The x11 OpenGL demo comes from here, slightly tweaked so that it looks for all source in the same directory:

https://github.com/Immediate-Mode-UI/Nuklear/tree/master/demo/x11_opengl2

It is built and run the same way:

    docker run -e ACCEPT_FSL_EULA=1 -d --rm --name=fred \
        --net=host --cap-add CAP_SYS_TTY_CONFIG \
        -v /dev:/dev -v /tmp:/tmp -v /run/udev/:/run/udev/ \
        --device-cgroup-rule='c 4:* rmw' \
        --device-cgroup-rule='c 13:* rmw' \
        --device-cgroup-rule='c 199:* rmw' \
        --device-cgroup-rule='c 226:* rmw' \
        seasonedgeek/nuklear-x11-opengl-demo --developer weston-launch \
        --tty=/dev/tty7 --user=torizon

    docker run -e ACCEPT_FSL_EULA=1 -d --rm --name=ethyl --user=torizon \
        -v /dev/dri:/dev/dri -v /dev/galcore:/dev/galcore \
        -v /tmp:/tmp \
        --device-cgroup-rule='c 199:* rmw' \
        --device-cgroup-rule='c 226:* rmw' \
        seasonedgeek/nuklear-x11-opengl-demo launch-x11-opengl-demo

It fails to open the display, right here:

    win.dpy = XOpenDisplay(NULL);
    if (!win.dpy) die("Failed to open X display\n");
    {
        /* check glx version */
        int glx_major, glx_minor;
        if (!glXQueryVersion(win.dpy, &glx_major, &glx_minor))
            die("[X11]: Error: Failed to query OpenGL version\n");
        if ((glx_major == 1 && glx_minor < 3) || (glx_major < 1))
            die("[X11]: Error: Invalid GLX version!\n");
    }

It doesn’t even get to the checks below that. I’m building within the same VM where I build the working one.

Everything resolves to aarch64 libraries, as it should:

    root@verdin-imx8mp-06848973:/home/torizon# ldd demo
        linux-vdso.so.1 (0x0000ffff93af1000)
        libX11.so.6 => /usr/lib/aarch64-linux-gnu/libX11.so.6 (0x0000ffff93911000)
        libm.so.6 => /lib/aarch64-linux-gnu/libm.so.6 (0x0000ffff93866000)
        libGL.so.1 => /usr/lib/aarch64-linux-gnu/libGL.so.1 (0x0000ffff937ca000)
        libc.so.6 => /lib/aarch64-linux-gnu/libc.so.6 (0x0000ffff93654000)
        /lib/ld-linux-aarch64.so.1 (0x0000ffff93ac1000)
        libxcb.so.1 => /usr/lib/aarch64-linux-gnu/libxcb.so.1 (0x0000ffff9361c000)
        libdl.so.2 => /lib/aarch64-linux-gnu/libdl.so.2 (0x0000ffff93608000)
        libGAL.so => /usr/lib/aarch64-linux-gnu/libGAL.so (0x0000ffff93445000)
        libXdamage.so.1 => /usr/lib/aarch64-linux-gnu/libXdamage.so.1 (0x0000ffff93432000)
        libXfixes.so.3 => /usr/lib/aarch64-linux-gnu/libXfixes.so.3 (0x0000ffff9341c000)
        libXext.so.6 => /usr/lib/aarch64-linux-gnu/libXext.so.6 (0x0000ffff933f8000)
        libpthread.so.0 => /lib/aarch64-linux-gnu/libpthread.so.0 (0x0000ffff933c7000)
        libdrm.so.2 => /usr/lib/aarch64-linux-gnu/libdrm.so.2 (0x0000ffff933a4000)
        libXau.so.6 => /usr/lib/aarch64-linux-gnu/libXau.so.6 (0x0000ffff93390000)
        libXdmcp.so.6 => /usr/lib/aarch64-linux-gnu/libXdmcp.so.6 (0x0000ffff9337a000)
        libbsd.so.0 => /usr/lib/aarch64-linux-gnu/libbsd.so.0 (0x0000ffff93355000)
        libmd.so.0 => /usr/lib/aarch64-linux-gnu/libmd.so.0 (0x0000ffff93339000)

Does the Weston Vivante container just not like version 2 of OpenGL, even though it loads the libraries for it?
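
Since the failure is at `XOpenDisplay(NULL)` itself, before any GLX call, one thing that might be worth ruling out is the display environment inside the second container. With a NULL argument, Xlib falls back to the `DISPLAY` environment variable, and a local display such as `:0` is conventionally reached through the socket `/tmp/.X11-unix/X0`. The sketch below checks both. The function names `x11_socket_path` and `check_x11_display` are hypothetical helpers made up for illustration, and it is only an assumption that the Weston container exposes an X socket under the shared `/tmp` in this setup.

```shell
#!/bin/sh
# Sketch only: x11_socket_path and check_x11_display are hypothetical helpers,
# not part of Nuklear, Xlib, or any Torizon image.

# Map a DISPLAY value such as ":0" or ":1.0" to the Unix socket path that a
# local X server conventionally listens on. Any host part is ignored, so this
# only makes sense for local displays.
x11_socket_path() {
    number="${1##*:}"       # keep what follows the last colon: "0" or "1.0"
    number="${number%%.*}"  # drop an optional ".screen" suffix
    printf '/tmp/.X11-unix/X%s\n' "$number"
}

# Report whether DISPLAY is set and whether the matching socket exists.
check_x11_display() {
    if [ -z "${DISPLAY:-}" ]; then
        echo "DISPLAY is not set"
        return 1
    fi
    sock="$(x11_socket_path "$DISPLAY")"
    if [ -S "$sock" ]; then
        echo "DISPLAY=$DISPLAY, socket $sock exists"
    else
        echo "DISPLAY=$DISPLAY, but no socket at $sock"
        return 1
    fi
}
```

Running `check_x11_display` in a shell inside both the working and the failing application container would show whether the two images differ here. If `DISPLAY` turns out to be empty in the failing one, it could be passed explicitly with docker's `-e` option, for example `-e DISPLAY=:0`, assuming `:0` is the display the working demo uses.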