If this is what you mean by addresses: I am using the predefined macros, so the addresses match the ones from your link. This is how they are defined in my code (I used the cmsis_ecspi_int_loopback_transfer example as a guide):

    #define ECSPI1_TRANSFER_SIZE 8
    #define ECSPI1_TRANSFER_BAUDRATE 2000000U
    #define ECSPI1_MASTER_BASEADDR ECSPI1
    #define ECSPI1_MASTER_CLK_FREQ \
        (CLOCK_GetPllFreq(kCLOCK_SystemPll1Ctrl) / (CLOCK_GetRootPreDivider(kCLOCK_RootEcspi1)) / \
         (CLOCK_GetRootPostDivider(kCLOCK_RootEcspi1)))
    #define ECSPI1_MASTER_TRANSFER_CHANNEL kECSPI_Channel0

    #define ECSPI2_TRANSFER_SIZE 64 // ECSPI uses 32-bit buffers; with 64 bits, two uint32 words are sent at once
    #define ECSPI2_TRANSFER_BAUDRATE 2000000U
    #define ECSPI2_MASTER_BASEADDR ECSPI2
    #define ECSPI2_MASTER_CLK_FREQ \
        (CLOCK_GetPllFreq(kCLOCK_SystemPll1Ctrl) / (CLOCK_GetRootPreDivider(kCLOCK_RootEcspi2)) / \
         (CLOCK_GetRootPostDivider(kCLOCK_RootEcspi2)))
    #define ECSPI2_MASTER_TRANSFER_CHANNEL kECSPI_Channel0
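
As a quick check of the numbers, here is a host-side sketch of the arithmetic that ECSPIx_MASTER_CLK_FREQ performs, fed with the SYSTEM PLL1 frequency (800 MHz) and the 2U/5U root dividers from my init code. The function name ecspi_root_clk_hz is made up for illustration; on the target the macro reads the real values at runtime through the CLOCK_Get* calls.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical host-side model of ECSPIx_MASTER_CLK_FREQ:
 * root clock = PLL output / root pre-divider / root post-divider. */
static uint32_t ecspi_root_clk_hz(uint32_t pll_hz, uint32_t pre_div, uint32_t post_div)
{
    assert(pre_div >= 1u && post_div >= 1u); /* guard against division by zero */
    return pll_hz / pre_div / post_div;
}
```

Dividing in two integer steps gives the same result as dividing once by the product, so 800 MHz / 2 / 5 comes out at exactly 80 MHz, matching the comment in the init code.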

And these are the macros from `\devices\MIMX8MM6\MIMX8MM6_cm4.h`, with the actual addresses:

    #define ECSPI1_BASE (0x30820000u)
    /** Peripheral ECSPI1 base pointer */
    #define ECSPI1 ((ECSPI_Type *)ECSPI1_BASE)
    /** Peripheral ECSPI2 base address */
    #define ECSPI2_BASE (0x30830000u)
    /** Peripheral ECSPI2 base pointer */
    #define ECSPI2 ((ECSPI_Type *)ECSPI2_BASE)
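
To make the base-pointer cast concrete: an access such as `ECSPI1->CONREG` resolves to ECSPI1_BASE plus the offset of CONREG inside ECSPI_Type. The sketch below reproduces that address calculation on a host with a hypothetical mock struct (mock_ecspi_regs_t, MOCK_ECSPI1_BASE, MOCK_ECSPI2_BASE and ecspi_reg_addr are illustration names). The register order and offsets are my assumption from the ECSPI memory map in the reference manual and should be checked against ECSPI_Type in MIMX8MM6_cm4.h.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical mock of the ECSPI register block (assumed layout). */
typedef struct {
    uint32_t RXDATA;    /* 0x00 */
    uint32_t TXDATA;    /* 0x04 */
    uint32_t CONREG;    /* 0x08 */
    uint32_t CONFIGREG; /* 0x0C */
    uint32_t INTREG;    /* 0x10 */
    uint32_t DMAREG;    /* 0x14 */
    uint32_t STATREG;   /* 0x18 */
    uint32_t PERIODREG; /* 0x1C */
    uint32_t TESTREG;   /* 0x20 */
} mock_ecspi_regs_t;

/* Base addresses copied from MIMX8MM6_cm4.h. */
#define MOCK_ECSPI1_BASE 0x30820000u
#define MOCK_ECSPI2_BASE 0x30830000u

/* Physical address of a register: peripheral base + offset of the field. */
static uint32_t ecspi_reg_addr(uint32_t base, size_t offset)
{
    /* offsets must stay below the 0x10000 spacing between the two bases */
    assert(offset < (size_t)(MOCK_ECSPI2_BASE - MOCK_ECSPI1_BASE));
    return base + (uint32_t)offset;
}
```

For example, `ecspi_reg_addr(MOCK_ECSPI1_BASE, offsetof(mock_ecspi_regs_t, CONREG))` yields 0x30820008, which is the address the cast in the ECSPI1 macro would access for CONREG under this assumed layout.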

You are right. When I test them separately, ECSPI2 keeps working, but ECSPI1 halts as soon as Linux starts, and I don’t see why, especially since ECSPI2, not ECSPI1, is the interface that should be enabled in Linux and causing the interference.

Here is some of my initialization code, which might help you identify the problem:

    //ECSPI1 config and init
    CLOCK_SetRootMux(kCLOCK_RootEcspi1, kCLOCK_EcspiRootmuxSysPll1); /* Set ECSPI1 source to SYSTEM PLL1 800 MHz */
    CLOCK_SetRootDivider(kCLOCK_RootEcspi1, 2U, 5U); /* Set root clock to 800 MHz / 10 = 80 MHz */

    ECSPI_MasterGetDefaultConfig(&masterConfig1);
    masterConfig1.baudRate_Bps = ECSPI1_TRANSFER_BAUDRATE;
    masterConfig1.channel = kECSPI_Channel0;
    masterConfig1.burstLength = 8;

    //SS software controlled as GPIO
    gpio_pin_config_t gpio_config1 = {kGPIO_DigitalOutput, 1, kGPIO_NoIntmode};
    GPIO_PinInit(ECSPI1_CS_MUX_GPIO, ECSPI1_CS_MUX_GPIO_PIN, &gpio_config1);

    ECSPI_MasterInit(ECSPI1_MASTER_BASEADDR, &masterConfig1, ECSPI1_MASTER_CLK_FREQ);
    ECSPI_MasterTransferCreateHandle(ECSPI1_MASTER_BASEADDR, &g_m_handle1, ECSPI1_MasterUserCallback, NULL);
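
The 2 MHz baudRate_Bps is derived inside the ECSPI from the 80 MHz root clock. As I understand the CONREG description in the reference manual, SCLK = root / ((PRE_DIVIDER + 1) * 2^POST_DIVIDER), with both fields 4 bits wide. The sketch below picks such dividers on a host; ecspi_pick_dividers and ecspi_div_t are hypothetical, and ECSPI_MasterInit does its own computation, which may choose differently.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed CONREG divider fields: SCLK = ref / ((pre + 1) * 2^post), pre/post in 0..15. */
typedef struct {
    uint32_t pre;     /* PRE_DIVIDER: divide by pre + 1 */
    uint32_t post;    /* POST_DIVIDER: divide by 2^post */
    uint32_t sclk_hz; /* resulting SPI clock */
} ecspi_div_t;

/* Hypothetical helper: fastest SCLK that does not exceed target_hz.
 * Trying post = 0, 1, 2, ... and taking the smallest pre that fits gives the
 * smallest total divider, because that total never shrinks as post grows. */
static ecspi_div_t ecspi_pick_dividers(uint32_t ref_hz, uint32_t target_hz)
{
    assert(ref_hz > 0u && target_hz > 0u);
    for (uint32_t post = 0u; post <= 15u; post++) {
        uint64_t step = (uint64_t)target_hz << post;        /* target * 2^post */
        uint64_t n = ((uint64_t)ref_hz + step - 1u) / step; /* ceil(ref / step) = pre + 1 */
        if (n <= 16u) {
            ecspi_div_t d = { (uint32_t)(n - 1u), post, (uint32_t)(ref_hz / (n << post)) };
            return d;
        }
    }
    /* Target below the reachable range: fall back to the largest divider. */
    ecspi_div_t d = { 15u, 15u, ref_hz / (16u << 15) };
    return d;
}
```

For 80 MHz to 2 MHz this gives PRE_DIVIDER = 9 and POST_DIVIDER = 2, i.e. 80 MHz / (10 * 4) = 2 MHz exactly.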

    //ECSPI2 config and init
    CLOCK_SetRootMux(kCLOCK_RootEcspi2, kCLOCK_EcspiRootmuxSysPll1); /* Set ECSPI2 source to SYSTEM PLL1 800 MHz */
    CLOCK_SetRootDivider(kCLOCK_RootEcspi2, 2U, 5U); /* Set root clock to 800 MHz / 10 = 80 MHz */

    ECSPI_MasterGetDefaultConfig(&masterConfig2);
    masterConfig2.baudRate_Bps = ECSPI2_TRANSFER_BAUDRATE;
    masterConfig2.channel = kECSPI_Channel0;
    masterConfig2.burstLength = 64;

    //SS software controlled as GPIO
    gpio_pin_config_t gpio_config2 = {kGPIO_DigitalOutput, 1, kGPIO_NoIntmode};
    GPIO_PinInit(ECSPI2_SS0_GPIO, ECSPI2_SS0_GPIO_PIN, &gpio_config2);

    ECSPI_MasterInit(ECSPI2_MASTER_BASEADDR, &masterConfig2, ECSPI2_MASTER_CLK_FREQ);
    ECSPI_MasterTransferCreateHandle(ECSPI2_MASTER_BASEADDR, &g_m_handle2, ECSPI2_MasterUserCallback, NULL);
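
The burstLength values relate to the 32-bit FIFO entries as follows: a burst of burstLength bits occupies ceil(burstLength / 32) entries, so ECSPI1 (8 bits) uses one entry per burst and ECSPI2 (64 bits) uses two uint32 words per burst. The helpers below are hypothetical, and the 1 to 4096 bit burst range and the MSB-first word order are my assumptions from the reference manual, not something confirmed in this thread.

```c
#include <assert.h>
#include <stdint.h>

/* Number of 32-bit TX FIFO entries one burst of burst_bits bits uses
 * (assumed BURST_LENGTH range: 1..4096 bits). */
static uint32_t ecspi_words_per_burst(uint32_t burst_bits)
{
    assert(burst_bits >= 1u && burst_bits <= 4096u);
    return (burst_bits + 31u) / 32u; /* round up to whole 32-bit words */
}

/* Word idx (0 = written to the FIFO first) of a 64-bit frame, upper half
 * first, assuming the controller shifts each burst out MSB first. */
static uint32_t ecspi_u64_word(uint64_t frame, uint32_t idx)
{
    assert(idx < 2u);
    return idx == 0u ? (uint32_t)(frame >> 32) : (uint32_t)frame;
}
```

Under these assumptions, sending the 64-bit frame 0x1122334455667788 as one burst means writing 0x11223344 followed by 0x55667788 into the transmit buffer.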

It is also interesting that the ECSPI1_MasterUserCallback function receives kStatus_Success even though the data is not transferred to the slave.

One more point regarding CLOCK_SetRootMux: I wasn’t sure whether both interfaces could use the same clock, so at first I tried kCLOCK_EcspiRootmuxSysPll1 and kCLOCK_EcspiRootmuxSysPll3, but my tests suggest that they can in fact both use the same clock.

Please tell me if you need any further information in order to understand the problem.

Thanks in advance.

EDIT:

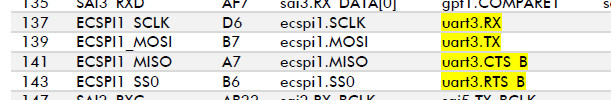

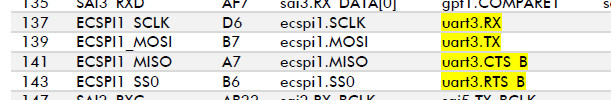

One other thing that crossed my mind: the ECSPI1 pins are muxed for UART3 by default. I use pin muxing on the M core to configure them for SPI, but Linux might be trying to use them as UART3 and blocking them. I guess I should try disabling UART3 in the device tree.