Hi. I’m trying to run the create-production-image task from VS Code for test purposes.

I’m using this C project example:

https://developer.toradex.com/torizon/application-development/ide-extension/application-development-c-example/

I’m following this guide to create the production image:

https://developer.toradex.com/torizon/application-development/ide-extension/generating-production-images/#prepare-your-application-container-for-production

When I run the create-production-image task, I get this error output:

- Executing task: xonsh /home/boris/lwdir/devel/soft/torizon/c/gpioc/.conf/create-docker-compose-production.xsh /home/boris/lwdir/devel/soft/torizon/c/gpioc v0.0.0 gpioc

Executing task: run-torizon-binfmt <

Container Runtime: docker

Run Arguments: --rm --privileged torizon/binfmt:latest

Container Name: binfmt

Container does not exists. Starting …

Cmd: docker run --name binfmt --rm --privileged torizon/binfmt:latest

Rebuilding rodion82/gpioc:v0.0.0 …

Executing task: apply-torizon-packages-arm <

Applying torizonPackages.json:

Applying to Dockerfile.debug …

Dockerfile.debug

Applying to Dockerfile.sdk …

Dockerfile.sdk

Applying to Dockerfile …

Dockerfile

torizonPackages.json applied

Executing task: build-container-torizon-release-arm <

Compose can now delegate builds to bake for better performance.

To do so, set COMPOSE_BAKE=true.

[+] Building 20.4s (14/15) docker:default

=> [gpioc internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 2.44kB 0.0s

=> WARN: InvalidDefaultArgInFrom: Default value for ARG torizon/cross-toolchain-${IMAGE_ARCH}:${CROSS_SDK_BASE_TAG} results in empty or invalid base image name (line 15) 0.0s

=> [gpioc internal] load metadata for docker.io/torizon/debian:4 3.9s

=> [gpioc internal] load metadata for docker.io/torizon/cross-toolchain-arm:4 0.7s

=> [gpioc internal] load .dockerignore 0.0s

=> => transferring context: 56B 0.0s

=> CACHED [gpioc build 1/6] FROM docker.io/torizon/cross-toolchain-arm:4@sha256:e55473f56bd83ac3eb4d5c900a1be70d2049fe3a3e67210790ca4bcb8f3ed7b3 0.0s

=> [gpioc deploy 1/4] FROM docker.io/torizon/debian:4@sha256:89e87a5ad78b8b7741e7209cab96fabdea1893bffe06b036ff3368d2e64063de 15.1s

=> => resolve docker.io/torizon/debian:4@sha256:89e87a5ad78b8b7741e7209cab96fabdea1893bffe06b036ff3368d2e64063de 0.1s

=> => sha256:6aafc35ac588733109bc0c55587676a69c7a13b7c2d7306cdb389c2db1299c9c 23.94MB / 23.94MB 5.4s

=> => sha256:964b77426128fdfd88ad74c6b52b3199708ff78b540cba07110121a70f46cc49 5.53MB / 5.53MB 4.4s

=> => sha256:a800063b17a2e4ef7df152037c1382b84e681abc0da31ecff1b4681ec79107f6 270B / 270B 2.5s

=> => sha256:89e87a5ad78b8b7741e7209cab96fabdea1893bffe06b036ff3368d2e64063de 2.38kB / 2.38kB 0.0s

=> => sha256:f5b235db6bbf37a9b7cca73ae8774a8e9f143c40a3747c293e23dc7146950cee 6.49kB / 6.49kB 0.0s

=> => sha256:9b8f3f923bc9df60e15af68edfd27ca8b6d50a3f2726931a3c0307baa32a85b2 2.56kB / 2.56kB 0.0s

=> => sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 32B / 32B 3.2s

=> => sha256:8f1d9fccd10e2a2fa4a5c2bf412412f162f56f487cfdb765d24300a0fa97d1f4 259B / 259B 4.6s

=> => sha256:b1b720b4a1d97ec509012bae116e7894f253cf46af0f1dd890d23e4266382c23 636B / 636B 4.9s

=> => sha256:081e7095ceaad3e74fe1be4f45ee1b74b5d51a8a181199208e2ee4da104fdd01 3.45kB / 3.45kB 5.7s

=> => sha256:ba56d0f4b83174adea37effb0145e35d9beb93c2a45bb1589247a42bfc440d4e 1.98kB / 1.98kB 6.0s

=> => sha256:4166ce5cedfcef6a2cf0ab8ec389b8b0657525178097d228a0eef3c1650a75ca 405B / 405B 6.5s

=> => extracting sha256:6aafc35ac588733109bc0c55587676a69c7a13b7c2d7306cdb389c2db1299c9c 5.4s

=> => sha256:ebd20dfafd08daf2400c46c6d277fa85a0a7dbaa4f331514196c6be836a79b8e 15.28MB / 15.28MB 9.0s

=> => sha256:ff9c0e18d25386de7f8b64d99c07fbb5352647263ed5da783ad07d72c854b486 1.16MB / 1.16MB 7.5s

=> => sha256:7b7f3ff35c6306713c9acf0cea647e86fb4b2e27181568cd60c88edc7cb57a57 172B / 172B 6.9s

=> => extracting sha256:964b77426128fdfd88ad74c6b52b3199708ff78b540cba07110121a70f46cc49 0.7s

=> => extracting sha256:a800063b17a2e4ef7df152037c1382b84e681abc0da31ecff1b4681ec79107f6 0.0s

=> => extracting sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 0.0s

=> => extracting sha256:8f1d9fccd10e2a2fa4a5c2bf412412f162f56f487cfdb765d24300a0fa97d1f4 0.0s

=> => extracting sha256:b1b720b4a1d97ec509012bae116e7894f253cf46af0f1dd890d23e4266382c23 0.0s

=> => extracting sha256:081e7095ceaad3e74fe1be4f45ee1b74b5d51a8a181199208e2ee4da104fdd01 0.0s

=> => extracting sha256:ba56d0f4b83174adea37effb0145e35d9beb93c2a45bb1589247a42bfc440d4e 0.0s

=> => extracting sha256:4166ce5cedfcef6a2cf0ab8ec389b8b0657525178097d228a0eef3c1650a75ca 0.0s

=> => extracting sha256:ebd20dfafd08daf2400c46c6d277fa85a0a7dbaa4f331514196c6be836a79b8e 0.5s

=> => extracting sha256:ff9c0e18d25386de7f8b64d99c07fbb5352647263ed5da783ad07d72c854b486 0.1s

=> => extracting sha256:7b7f3ff35c6306713c9acf0cea647e86fb4b2e27181568cd60c88edc7cb57a57 0.0s

=> [gpioc build 2/6] RUN apt-get -q -y update && apt-get -q -y install libgpiod-dev:armhf && apt-get clean && apt-get autoremove && rm -rf /var/lib/a 14.3s

=> [gpioc internal] load build context 0.1s

=> => transferring context: 7.68kB 0.0s

=> [gpioc build 3/6] COPY . /home/torizon/app 0.3s

=> [gpioc build 4/6] WORKDIR /home/torizon/app 0.2s

=> [gpioc build 5/6] RUN rm -rf /home/torizon/app/build-arm 0.6s

=> CANCELED [gpioc deploy 2/4] RUN apt-get -y update && apt-get install -y --no-install-recommends libgpiod2:armhf && apt-get clean && apt-get autoremove && rm -rf / 1.2s

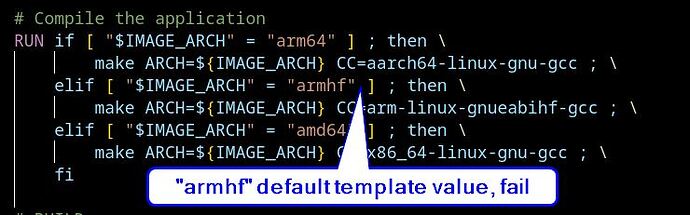

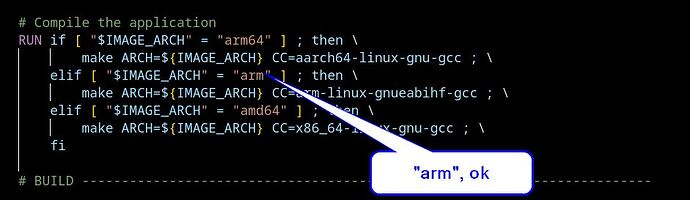

=> [gpioc build 6/6] RUN if [ "arm" = "arm64" ] ; then make ARCH=arm CC=aarch64-linux-gnu-gcc ; elif [ "arm" = "armhf" ] ; then make ARCH=arm CC=arm-l 0.7s

=> ERROR [gpioc deploy 3/4] COPY --from=build /home/torizon/app/build-arm/bin /home/torizon/app 0.0s

[gpioc deploy 3/4] COPY --from=build /home/torizon/app/build-arm/bin /home/torizon/app:

failed to solve: failed to compute cache key: failed to calculate checksum of ref b354cafd-74a5-4a05-883c-96b208db81b3::u775cbe13exr4dfwie8r25vg6: "/home/torizon/app/build-arm/bin": not found

TASK [build-container-torizon-release-arm] exited with error code [1] <

Error: RuntimeError('Error running task: build-container-torizon-release-arm')

Error: RuntimeError('Error running task: build-container-torizon-release-arm')

Error cause: Task execution error

xonsh: To log full traceback to a file set: $XONSH_TRACEBACK_LOGFILE = <filename>

Traceback (most recent call last):

File "/home/boris/lwdir/devel/soft/torizon/c/gpioc/.conf/create-docker-compose-production.xsh", line 169, in <module>

xonsh ./.vscode/tasks.xsh run @(f"build-container-torizon-release-{_image_arch}")

File "/home/boris/.local/pipx/venvs/xonsh/lib/python3.11/site-packages/xonsh/built_ins.py", line 220, in subproc_captured_hiddenobject

return xonsh.procs.specs.run_subproc(cmds, captured="hiddenobject", envs=envs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/boris/.local/pipx/venvs/xonsh/lib/python3.11/site-packages/xonsh/procs/specs.py", line 1107, in run_subproc

return _run_specs(specs, cmds)

^^^^^^^^^^^^^^^^^^^^^^^

File "/home/boris/.local/pipx/venvs/xonsh/lib/python3.11/site-packages/xonsh/procs/specs.py", line 1153, in _run_specs

cp.end()

File "/home/boris/.local/pipx/venvs/xonsh/lib/python3.11/site-packages/xonsh/procs/pipelines.py", line 481, in end

self._end(tee_output=tee_output)

File "/home/boris/.local/pipx/venvs/xonsh/lib/python3.11/site-packages/xonsh/procs/pipelines.py", line 500, in _end

self._raise_subproc_error()

File "/home/boris/.local/pipx/venvs/xonsh/lib/python3.11/site-packages/xonsh/procs/pipelines.py", line 646, in _raise_subproc_error

raise subprocess.CalledProcessError(rtn, spec.args, output=self.output)

subprocess.CalledProcessError: Command '['xonsh', './.vscode/tasks.xsh', 'run', 'build-container-torizon-release-arm']' returned non-zero exit status 54.

- The terminal process "xonsh '/home/boris/lwdir/devel/soft/torizon/c/gpioc/.conf/create-docker-compose-production.xsh', '/home/boris/lwdir/devel/soft/torizon/c/gpioc', 'v0.0.0', 'gpioc', ''" terminated with exit code: 1.

- Terminal will be reused by tasks, press any key to close it.

I’m using VS Code on Debian 12.10.

Thanks in advance.